Project Summary

What are telepresence devices?

Telepresence devices use robotic platforms equipped with sensors and communication tools to execute tasks remotely. Commercially available models typically offer limited mobility and interaction capabilities. Many give the user no way to physically interact with the remote environment and are not designed to be accessible to people with disabilities, which prevents them from effectively using this emerging technology.

What is iTAD?

Intelligent Telepresence Assistive Device (iTAD) is a robotic platform that facilitates real-time communication and remote interaction across a variety of application areas. iTAD aims to address the technological barriers that can prevent people with disabilities from accessing the healthcare they need or from communicating and interacting with loved ones in independent living centres. iTAD can make healthcare more accessible by connecting remote medical professionals with patients, including those who are permanently or temporarily disabled, and by assisting nurses, other doctors and family members. In independent living facilities, iTAD can aid the elderly with tasks such as walking, eating and administering medications, and can let them communicate remotely with healthcare professionals. iTAD's robust design and versatility offer a cost-effective solution for a variety of scenarios that connect individuals and facilitate interactions. In addition, iTAD is designed to fit safely within dynamic hospitals and independent living facilities without requiring any changes to existing infrastructure.

What are examples of iTAD use cases?

- Doctor consulting with a patient across the country or around the globe

- Nurse assisting a child with cerebral palsy by holding or lifting an object via iTAD

- Son connecting with his elderly parents and assisting them at their independent living facility

- Deaf or hard-of-hearing individual communicating and interacting with family members in a different country

- iTAD accompanying a patient travelling to their hospital room using autonomous navigation

What are iTAD’s features?

The head consists of the tablet, camera and LiDAR (Light Detection and Ranging) sensor. Because the head structure is centralized, an end-user can look at the tablet while also looking into the camera, which makes interactions feel natural. The tablet displays the live audiovisual stream from the desktop application. Facial detection software locates the largest face in each frame, and the camera's pan and tilt functions follow that face, so the remote user does not need to constantly readjust the camera during a conversation. Together, the camera's pan and tilt functions and the removable tablet allow people of different heights to communicate comfortably through iTAD. In addition, the head can rotate on the neck structure, allowing users to easily address someone who is not directly in front of iTAD. The LiDAR sensor enables iTAD to map its surroundings for autonomous navigation.
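The face-following behaviour described above can be sketched as a simple proportional controller: pick the largest detected face, measure its offset from the frame centre, and nudge the pan and tilt toward it. This is a minimal sketch, not iTAD's implementation. It assumes a face detector that already returns pixel bounding boxes, and `gain` (degrees per pixel) and `deadband` (pixels) are hypothetical tuning values; the sign convention for the physical pan/tilt motors is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class Box:
    x: int  # left edge, pixels
    y: int  # top edge, pixels
    w: int  # width, pixels
    h: int  # height, pixels


def largest_face(boxes):
    """Return the detected face with the largest area, or None if there are none."""
    return max(boxes, key=lambda b: b.w * b.h, default=None)


def pan_tilt_step(boxes, frame_w, frame_h, gain=0.05, deadband=20):
    """Return a (pan, tilt) correction in degrees toward the largest face.

    The offset (dx, dy) runs from the frame centre to the face centre.
    Positive pan means the face is right of centre; positive tilt means it
    is below centre (image coordinates). Offsets inside the hypothetical
    deadband are ignored so the camera does not jitter.
    """
    face = largest_face(boxes)
    if face is None:
        return 0.0, 0.0
    dx = (face.x + face.w / 2) - frame_w / 2
    dy = (face.y + face.h / 2) - frame_h / 2
    pan = gain * dx if abs(dx) > deadband else 0.0
    tilt = gain * dy if abs(dy) > deadband else 0.0
    return pan, tilt
```

Choosing the largest box is a cheap proxy for "the person closest to the camera", which matches the behaviour the summary describes; calling `pan_tilt_step` once per frame keeps the face near the centre without the remote user touching the controls.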

Because iTAD's communication platform is highly configurable, features can be added to ensure that iTAD complies with accessibility standards. These include:

- Voice commands for the main functions of the robot, such as start-up and autonomous navigation

- Video filters that help visually impaired users and people who lip-read to see the video feed

- Real-time subtitles via speech-to-text conversion, together with text-to-speech conversion, to allow effective communication with deaf or hard-of-hearing individuals
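Voice commands could be handled by matching the output of a speech-to-text engine against a small phrase table. The sketch below assumes the transcript is already available as a plain string; the phrases and command names in `COMMANDS` are hypothetical, since the actual command set is not specified here.

```python
import re
from typing import Optional, Tuple

# Hypothetical phrase table mapping spoken phrases to robot commands.
COMMANDS = {
    "start up": "STARTUP",
    "wake up": "STARTUP",
    "navigate to": "NAVIGATE",
    "go to": "NAVIGATE",
    "stop": "STOP",
}


def parse_command(transcript: str) -> Optional[Tuple[str, str]]:
    """Map a speech-to-text transcript to (command, argument), or None.

    The argument is whatever follows the matched phrase, e.g. a room name.
    """
    # Lowercase and drop punctuation so "Go to room 12." matches "go to".
    text = re.sub(r"[^a-z0-9 ]", "", transcript.lower())
    # Try longer phrases first so a more specific phrase wins over a shorter one.
    for phrase in sorted(COMMANDS, key=len, reverse=True):
        # Word boundaries stop "stop" from matching inside e.g. "nonstop".
        match = re.search(r"\b" + re.escape(phrase) + r"\b", text)
        if match:
            return COMMANDS[phrase], text[match.end():].strip()
    return None
```

For example, `parse_command("iTAD, go to room 12.")` yields `("NAVIGATE", "room 12")`, which a navigation module could then resolve to a location on the map.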

Arm: Increasing iTAD’s Functionality

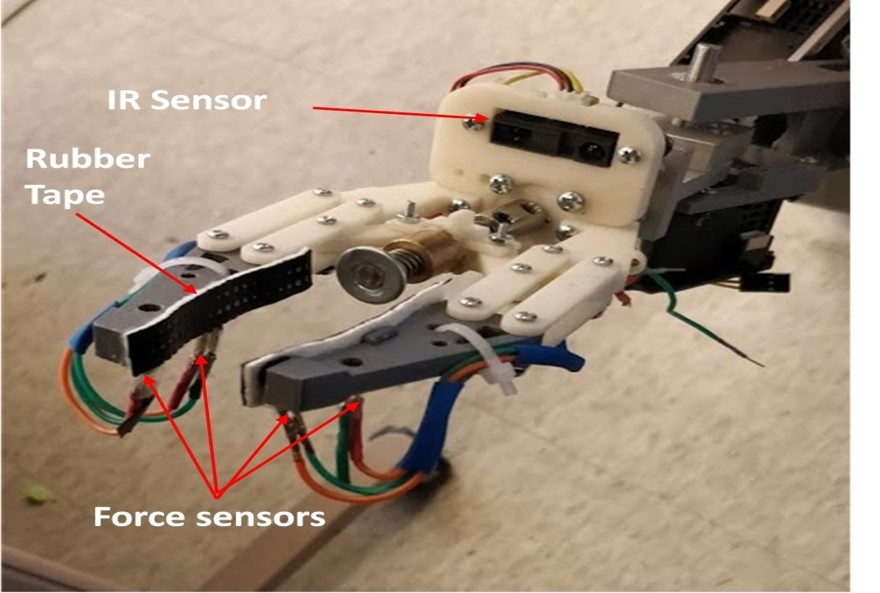

The communication experience is further enhanced by a five-degree-of-freedom (DOF) arm, which allows the user to physically interact with a large area of iTAD's surroundings. The user can intuitively control the position of the end-effector, or gripper, by toggling the main controller between arm control and drivetrain control. The versatile gripper design can effectively grasp objects of different dimensions and surface finishes. The gripper is instrumented with force sensors and infrared proximity sensors so that it can grasp objects and push items safely. The gripper can also be equipped with diagnostic tools, such as a heart rate sensor, to assist remote doctors with patient check-ups.
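The controller toggle and the force-limited grasp can be sketched as follows. Both pieces are illustrative assumptions rather than iTAD's code: the single toggle button, the `read_force` and `step_close` hardware callbacks, and the 5 N default force limit are all hypothetical.

```python
from enum import Enum
from typing import Callable, Tuple


class Mode(Enum):
    DRIVE = "drivetrain"
    ARM = "arm"


class ControllerRouter:
    """Route joystick axes to either the drivetrain or the arm.

    A single (hypothetical) toggle button switches modes, so the same
    sticks can either drive the base or move the gripper.
    """

    def __init__(self) -> None:
        self.mode = Mode.DRIVE
        self._prev_toggle = False

    def update(self, toggle_pressed: bool, x: float, y: float) -> Tuple[str, float, float]:
        # Switch only on the press (rising edge), so holding the button
        # does not flip the mode on every control cycle.
        if toggle_pressed and not self._prev_toggle:
            self.mode = Mode.ARM if self.mode is Mode.DRIVE else Mode.DRIVE
        self._prev_toggle = toggle_pressed
        return self.mode.value, x, y


def close_gripper(read_force: Callable[[], float], step_close: Callable[[], None],
                  max_force_n: float = 5.0, max_steps: int = 100) -> bool:
    """Close the gripper in small steps until the measured force reaches a limit.

    Returns True if the limit was reached (object gripped) and False if the
    step budget ran out first (e.g. nothing between the fingers).
    """
    for _ in range(max_steps):
        if read_force() >= max_force_n:
            return True
        step_close()
    return False
```

Stopping at a force threshold rather than at a fixed finger position is what lets one gripper hold objects of different sizes without crushing softer ones.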

The arm can assist people with disabilities by performing various tasks; examples include:

- Lifting light objects

- Moving IV poles

- Flicking light switches

- Pushing elevator buttons (also essential for autonomous navigation capabilities)

Showcase: Autonomous Navigation

To navigate autonomously, iTAD uses its LiDAR sensor to map its surroundings and to determine where it is by referencing a larger map of the environment; it then calculates a path to its destination.
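Mapping starts by projecting each LiDAR range reading into the map frame. The sketch below shows only that step, under simplifying assumptions: the robot's pose is taken as known (a full SLAM system estimates it at the same time), only the cells where beams hit obstacles are recorded (practical occupancy-grid mappers also trace free space and keep per-cell probabilities), and the 5 cm resolution and 8 m maximum range are hypothetical values.

```python
import math


def scan_to_occupied_cells(pose, ranges, angle_min, angle_increment,
                           resolution=0.05, max_range=8.0):
    """Convert one 2D LiDAR scan into the set of occupied grid cells.

    pose: (x, y, theta) of the robot in the map frame (metres, radians).
    ranges: distance in metres for each beam, starting at angle_min and
        stepping by angle_increment (radians).
    Returns a set of (i, j) cell indices where beams hit an obstacle.
    """
    x, y, theta = pose
    cells = set()
    for i, r in enumerate(ranges):
        # Skip invalid readings: zero, out of range, inf or NaN
        # (all comparisons with NaN are False).
        if not (0.0 < r < max_range):
            continue
        beam_angle = theta + angle_min + i * angle_increment
        hit_x = x + r * math.cos(beam_angle)
        hit_y = y + r * math.sin(beam_angle)
        cells.add((math.floor(hit_x / resolution), math.floor(hit_y / resolution)))
    return cells
```

Accumulating these cells over many scans while the robot moves produces the outline of walls and furniture, the "barriers" visible in the mapping demonstration.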

The video demonstrates iTAD's current mapping abilities. As iTAD (denoted by the red and green lines joined at a right angle) moves through a house, it maps the house's barriers, shown as black lines, as well as its own trajectory, shown as the bright green line. Path-planning algorithms such as A* are then applied to plan a path from iTAD's current location to a destination the user selects on the map.
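A* itself is compact. The following sketch runs A* on a 4-connected occupancy grid with unit step costs and a Manhattan-distance heuristic. The grid encoding (0 = free, 1 = occupied) is an assumption, and this is a generic textbook version rather than iTAD's planner.

```python
import heapq


def astar(grid, start, goal):
    """Shortest path on a 4-connected grid (0 = free, 1 = occupied).

    start and goal are (row, col) tuples. Returns the list of cells from
    start to goal inclusive, or None if no path exists.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None

    def h(cell):
        # Manhattan distance: never overestimates on a unit-cost 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    g_cost = {start: 0}
    came_from = {}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > g_cost[cell]:
            continue  # stale entry; a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None
```

Because the Manhattan heuristic never overestimates the remaining cost on this kind of grid, the returned path is a shortest one; an 8-connected grid would need a different heuristic, such as octile distance.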

What is iTAD’s current status?

- Structural components designed, manufactured and assembled

- Functional drivetrain with customizable controller inputs

- Facial detection and recognition, showcased in the video: the arrow points from the centre of the frame to the centre of the largest face, visualizing how iTAD's camera will move to keep that face in focus

- Prototype arm with instrumented gripper constructed

- Framework for autonomous navigation developed

iTAD's modular design allows new functionality to be added to increase its impact.