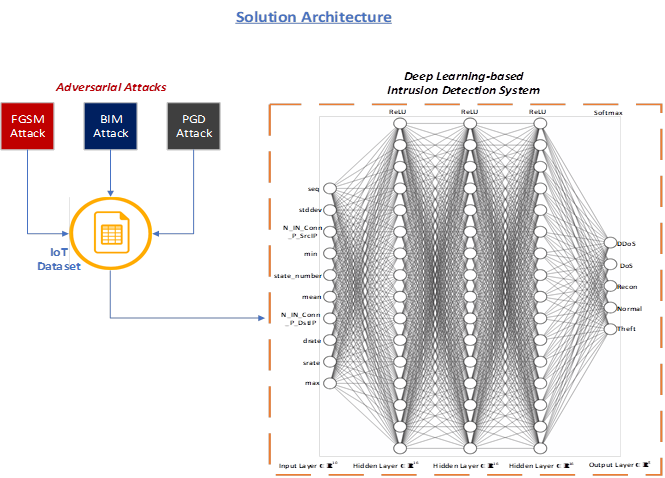

This project demonstrates the effects of adversarial samples on deep learning-based intrusion detection systems in IoT networks. Our experimental results illustrate how vulnerable such systems are to adversarial attacks. The project also compares the performance of feed-forward neural networks (FNNs) and self-normalizing neural networks (SNNs). Finally, it analyzes the effect of feature normalization on the adversarial robustness of deep learning-based intrusion detection systems.
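Feature normalization rescales every input feature to a common range before training. The page does not say which scheme the experiments used. The sketch below assumes min-max scaling to [0, 1], a common choice, written in plain Python. The function name `min_max_normalize` and the example feature values are hypothetical. One reason normalization matters for adversarial robustness is that, once features share the range [0, 1], a perturbation budget such as ε = 0.1 means 10% of each feature's observed range rather than an arbitrary amount in raw units such as packets, bytes or seconds.

```python
def min_max_normalize(rows):
    """Scale each feature column of `rows` into [0, 1].

    Assumption: min-max scaling is used here for illustration only; the
    project page does not state which normalization was applied.
    Returns the scaled rows plus the per-feature minima and maxima.
    """
    n_features = len(rows[0])
    mins = [min(r[j] for r in rows) for j in range(n_features)]
    maxs = [max(r[j] for r in rows) for j in range(n_features)]
    scaled = []
    for r in rows:
        scaled.append([
            # A constant column carries no information; map it to 0.0
            # instead of dividing by zero.
            (r[j] - mins[j]) / (maxs[j] - mins[j]) if maxs[j] > mins[j] else 0.0
            for j in range(n_features)
        ])
    return scaled, mins, maxs


# Hypothetical flow features: packet count, byte count, duration (s).
flows = [[10, 1500, 0.5], [20, 3000, 1.5], [30, 4500, 2.5]]
scaled, mins, maxs = min_max_normalize(flows)
print(scaled)
```

In a real pipeline the minima and maxima would be computed on the training data only and reused to scale test traffic, so that the detector is never evaluated with statistics it did not see during training.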

Project Description:

The NGN laboratory is actively researching the intersection of network security and artificial intelligence (AI) techniques such as deep learning. In this research project, we analyze the adversarial risks of applying deep learning methods to detect and mitigate security threats against IoT networks.

An adversarial attack on a deep learning model occurs when an adversarial example is fed as input to the model. An adversarial example is an input in which one or more features have been deliberately perturbed so that the model produces a wrong prediction.
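To make the definition concrete, the sketch below crafts an adversarial example with the Fast Gradient Sign Method (FGSM), a well-known attack. The page does not say which attack methods the experiments used, so FGSM is an illustrative choice. To stay dependency-free, the "detector" is a hypothetical logistic-regression model with made-up weights rather than a deep network, because its input gradient has a closed form. Every feature is shifted by ε in the direction that increases the classification loss. The result is then clipped to [0, 1], which assumes the features were min-max normalized.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability that feature vector x is attack traffic (class 1)."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def _sign(v):
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """FGSM against a logistic-regression detector (hypothetical stand-in).

    With binary cross-entropy loss and logit z = w.x + b, the gradient of
    the loss with respect to feature i is (sigmoid(z) - y_true) * w_i.
    Each feature moves by epsilon along the sign of that gradient, then
    is clipped to [0, 1] (assumes min-max normalized features).
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y_true) * w for w in weights]
    return [min(1.0, max(0.0, xi + epsilon * _sign(g))) for xi, g in zip(x, grad)]


# Hypothetical detector and an attack sample it detects correctly.
w, b = [2.0, -1.0, 3.0], -2.0
x = [0.6, 0.2, 0.5]
x_adv = fgsm_perturb(w, b, x, y_true=1, epsilon=0.2)
print(predict_proba(w, b, x), predict_proba(w, b, x_adv))
```

Here the original sample scores above 0.5 (flagged as an attack), while the perturbed sample, which differs by at most 0.2 in any feature, scores below 0.5 and is classified as benign.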

Fig. 2 Comparison of FNN and SNN architectures
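A central difference between a feed-forward neural network (FNN) and a self-normalizing neural network (SNN) is the activation function. An SNN uses the scaled exponential linear unit (SELU), whose constants, together with LeCun-normal weight initialization, push activations toward zero mean and unit variance as they pass through the layers, without explicit normalization layers. The sketch below is illustrative only. It assumes ReLU for the FNN (the page does not name the FNN's activation), uses random untrained weights, and picks an arbitrary depth and width. It sends a random input through six layers with each activation and reports the mean and variance of the final outputs.

```python
import math
import random

# Fixed constants of the SELU activation used in self-normalizing networks.
SELU_ALPHA = 1.6732632423543772
SELU_LAMBDA = 1.0507009873554805


def relu(z):
    return max(0.0, z)


def selu(z):
    # Scaled exponential linear unit: linear for z > 0, saturating towards
    # -SELU_LAMBDA * SELU_ALPHA (about -1.758) for very negative z.
    if z > 0:
        return SELU_LAMBDA * z
    return SELU_LAMBDA * SELU_ALPHA * (math.exp(z) - 1.0)


def lecun_normal(n_out, n_in, rng):
    # Weights drawn from N(0, 1/n_in), the initialization recommended for SNNs.
    std = 1.0 / math.sqrt(n_in)
    return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]


def dense(inputs, weights, activation):
    # One fully connected layer (no bias) followed by the activation.
    return [activation(sum(w * x for w, x in zip(row, inputs))) for row in weights]


def activation_stats(activation, depth=6, width=256, seed=0):
    """Mean and variance of the last layer's outputs for a random deep stack."""
    rng = random.Random(seed)
    h = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for _ in range(depth):
        h = dense(h, lecun_normal(width, width, rng), activation)
    mean = sum(h) / len(h)
    var = sum((v - mean) ** 2 for v in h) / len(h)
    return mean, var


if __name__ == "__main__":
    print("ReLU (FNN-style):", activation_stats(relu))
    print("SELU (SNN):", activation_stats(selu))
```

With the same initialization, the ReLU stack's activations shrink toward zero because their second moment roughly halves at each layer, while the SELU stack stays close to zero mean and unit variance. A plain FNN would usually counter this with He initialization or batch normalization. An SNN gets the effect from SELU and its matching initialization.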

We evaluate two types of deep learning models: a feed-forward artificial neural network (FNN) and a self-normalizing neural network (SNN). In our experimental setup, we build two network intrusion detection systems, one based on each model. Both intrusion detection systems are then used to analyze the network attack traffic of the BoT-IoT dataset from the Cyber Range Lab of UNSW Canberra Cyber.

[1] Olakunle Ibitoye, M. Omair Shafiq and Ashraf Matrawy, "Analyzing Adversarial Attacks Against Deep Learning for Intrusion Detection in IoT Networks," in Proc. of the IEEE Global Communications Conference (GLOBECOM 2019), Waikoloa, HI, USA, 9–13 December 2019 (accepted).

Researcher

Olakunle Ibitoye

Supervisors

Ashraf Matrawy

M. Omair Shafiq