Our group studies the applications of Machine Learning (ML) in network security (shown in the figure below) as well as the security of ML itself. Below are some of our projects in this area:

Machine Learning Applications in Network Security [1]

Surveying Adversarial Attacks against Machine Learning in Network Security [1]

Machine learning enhances decision support systems in network security, but it also faces unique adversarial threats. Our survey examines and classifies adversarial attacks against ML in network security, analyzing both attack methods and defenses. It provides taxonomies of ML techniques and of security applications, introduces an adversarial risk grid map, and uses this framework to evaluate existing attacks. The study highlights the ongoing arms race between attackers and defenders in ML-based network security and maps out the landscape of adversarial machine learning in this domain.

Studying and Testing Adversarial Training to Increase ML Robustness against Adversarial Attacks [2][3][4]

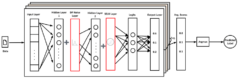

Our projects investigate the effectiveness of various evasion attacks and propose training resilient ML models using different neural networks. We employ a min-max formulation to enhance robustness against adversarial samples. Our experiments show that adversarial training with this formulation significantly strengthens the network against state-of-the-art attacks.

IDS prototype Architecture with the inner-maximizer that generate adversarial samples and the outer-minimizer that reduce False Negative (FN) rates and increase robustness [2]
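The min-max formulation can be written as `min_θ E_(x,y) [ max_{‖δ‖∞ ≤ ε} L(f_θ(x + δ), y) ]`: the inner maximization searches for the worst-case perturbation δ within a budget ε, and the outer minimization fits the model parameters θ to those worst-case samples. Below is a minimal Python sketch of that idea, assuming a toy logistic-regression model, projected gradient ascent (PGD) as the inner maximizer, and synthetic two-feature data. The function names, `eps`, and the data are hypothetical illustrations; this is not the IDS prototype, the neural networks, or the attacks evaluated in [2][3][4].

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def sign(v):
    return (v > 0) - (v < 0)


def predict(w, b, x):
    # Probability that x belongs to the positive ("malicious") class.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def inner_maximizer(w, b, x, y, eps, steps=5):
    """Approximate max over ||delta||_inf <= eps of the loss via projected gradient ascent."""
    alpha = 2.5 * eps / steps  # step size, a common PGD heuristic
    x_adv = list(x)
    for _ in range(steps):
        # For a logistic model, d(cross-entropy)/dx = (p - y) * w.
        err = predict(w, b, x_adv) - y
        x_adv = [
            min(max(xa + alpha * sign(err * wi), xi - eps), xi + eps)  # ascend, then project
            for xa, wi, xi in zip(x_adv, w, x)
        ]
    return x_adv


def outer_minimizer(data, dim, eps, epochs=50, lr=0.1, seed=0):
    """Outer min: SGD on the cross-entropy of the worst-case samples found by the inner max."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.01, 0.01) for _ in range(dim)]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = inner_maximizer(w, b, x, y, eps)
            err = predict(w, b, x_adv) - y  # d(loss)/d(logit)
            w = [wi - lr * err * xa for wi, xa in zip(w, x_adv)]
            b -= lr * err
    return w, b


# Toy, synthetic two-feature data: label 1 = "malicious", label 0 = "benign".
rng = random.Random(42)
data = [([2 + rng.gauss(0, 0.5), 2 + rng.gauss(0, 0.5)], 1) for _ in range(50)]
data += [([-2 + rng.gauss(0, 0.5), -2 + rng.gauss(0, 0.5)], 0) for _ in range(50)]

w, b = outer_minimizer(data, dim=2, eps=0.5)
# Robust accuracy: accuracy on samples perturbed by the same PGD attack after training.
robust_acc = sum(
    (predict(w, b, inner_maximizer(w, b, x, y, 0.5)) >= 0.5) == (y == 1) for x, y in data
) / len(data)
print(f"robust accuracy: {robust_acc:.2f}")
```

Swapping PGD for a different attack changes only `inner_maximizer`; the outer training loop stays the same, which is what lets the min-max formulation be reused across model types.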

Studying the Practicality of Adversarial Attacks against Network Intrusion Detection Systems and the Impact of Dynamic Learning on These Attacks [5][6]

Our projects examine the practicality of adversarial attacks against ML-based Network Intrusion Detection Systems (ML-NIDS). Their contributions include identifying practicality issues using an attack tree threat model, creating a taxonomy of these issues (shown in the figure below), and exploring how the dynamics of real-world ML models affect adversarial attacks. Our findings show that continuous re-training, even without adversarial training, can reduce the effectiveness of adversarial attacks, highlighting the gap between research and real-world deployment.

Taxonomy of Practicality Issues of Adversarial Attacks Against ML-NIDS [5]

Introducing Adaptive Continuous Adversarial Training (ACAT) to Enhance Machine Learning Robustness [7][8]

Adversarial training boosts the robustness of ML models against attacks, but obtaining labeled training data and adversarial data in network security is costly and difficult. Our group presented Adaptive Continuous Adversarial Training (ACAT), a method that integrates adversarial samples into the model during continuous learning sessions, using adversarial data detected in the real world. Experiments with NIDS and spam detection datasets show that ACAT reduces detection time and improves the accuracy of an ML model under attack.

ACAT Architecture [7]
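The continuous-learning idea described above can be sketched as a loop in which detected adversarial samples are fed back into each re-training session. This is a minimal illustration under stated assumptions, not the ACAT implementation from [7][8]: the nearest-centroid classifier, the `detection_rate` used to simulate which adversarial samples get detected in the real world, and the synthetic evasive samples are all hypothetical stand-ins.

```python
import random


def centroid(points):
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def train(pool):
    """Hypothetical stand-in model: a nearest-centroid classifier (one centroid per label)."""
    by_label = {}
    for x, y in pool:
        by_label.setdefault(y, []).append(x)
    return {y: centroid(xs) for y, xs in by_label.items()}


def classify(model, x):
    return min(model, key=lambda y: sum((a - c) ** 2 for a, c in zip(x, model[y])))


def accuracy(model, samples):
    return sum(classify(model, x) == y for x, y in samples) / len(samples)


def continuous_adversarial_training(clean_data, attack_batches, detection_rate, seed=0):
    """Each session: measure accuracy on the incoming adversarial batch, keep the samples
    that were detected (and therefore labeled), and re-train on the growing pool."""
    rng = random.Random(seed)
    pool = list(clean_data)
    model = train(pool)
    history = []
    for batch in attack_batches:
        history.append(accuracy(model, batch))  # accuracy under attack before re-training
        # Simulated detection: each adversarial sample is caught with probability detection_rate.
        detected = [(x, y) for x, y in batch if rng.random() < detection_rate]
        pool.extend(detected)
        model = train(pool)  # one continuous learning session
    return model, history


def gauss_points(rng, center, sigma, n, label):
    return [([c + rng.gauss(0, sigma) for c in center], label) for _ in range(n)]


rng = random.Random(7)
clean = gauss_points(rng, [0, 0], 0.5, 100, 0) + gauss_points(rng, [3, 3], 0.5, 100, 1)
# Synthetic evasive "malicious" samples shifted toward the benign region.
attacks = [gauss_points(rng, [1.2, 1.2], 0.3, 50, 1) for _ in range(5)]

model, history = continuous_adversarial_training(clean, attacks, detection_rate=0.5)
print([round(a, 2) for a in history])
```

`history` records the model's accuracy on each incoming adversarial batch before that session's re-training, so it can be used to track whether accuracy under attack recovers across sessions.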

Differentially Private Self-Normalizing Neural Networks for Adversarial Robustness in Federated Learning [9]

Federated Learning (FL) has been shown to help protect against privacy violations and information leakage. However, it introduces new risk vectors that make ML models more difficult to defend against adversarial samples. We consider the problem of improving the resilience of FL to adversarial samples without compromising the privacy-preserving features that FL was primarily designed to provide. Common techniques such as adversarial training, in their basic form, are not well suited to an FL environment. Our study shows that adversarial training, while improving adversarial robustness, comes at the cost of weakening the privacy guarantees of FL. We introduce DiPSeN, a Differentially Private Self-normalizing Neural Network that combines elements of differential privacy, self-normalization, and a novel optimization algorithm for adversarial client selection. Our empirical results on publicly available datasets for intrusion detection and image classification show that DiPSeN improves adversarial robustness in FL while maintaining its privacy-preserving characteristics.

DiPSeN Architecture [9]
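Two of the building blocks named above can be sketched in plain Python: the SELU activation that self-normalizing networks rely on, and a generic client-level differential-privacy step (clip each client update, then add Gaussian noise at aggregation, in the style of DP-FedAvg). This is an illustrative sketch with hypothetical function names and parameters (`max_norm`, `noise_multiplier`); it does not reproduce DiPSeN's exact mechanism, its privacy accounting, or its adversarial client-selection algorithm.

```python
import math
import random

# SELU constants from Klambauer et al. (2017), "Self-Normalizing Neural Networks".
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(z):
    """Scaled exponential linear unit: keeps activations near zero mean and unit variance."""
    return SELU_SCALE * (z if z > 0 else SELU_ALPHA * (math.exp(z) - 1.0))


def clip_update(update, max_norm):
    """Scale a client's model update so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * factor for u in update]


def private_aggregate(client_updates, max_norm, noise_multiplier, rng):
    """Gaussian mechanism at the server: average the clipped updates, then add noise.
    Noise std is noise_multiplier * max_norm / n, i.e. noise on the sum scaled by 1/n."""
    n = len(client_updates)
    clipped = [clip_update(u, max_norm) for u in client_updates]
    sigma = noise_multiplier * max_norm / n
    return [
        sum(c[i] for c in clipped) / n + rng.gauss(0.0, sigma)
        for i in range(len(clipped[0]))
    ]


rng = random.Random(0)
# Self-normalization check: SELU maps N(0, 1) inputs to roughly zero mean, unit variance.
outs = [selu(rng.gauss(0.0, 1.0)) for _ in range(200_000)]
mean = sum(outs) / len(outs)
var = sum((o - mean) ** 2 for o in outs) / len(outs)
print(f"SELU output mean={mean:.3f}, var={var:.3f}")

# One private aggregation round over three hypothetical client updates.
updates = [[3.0, 4.0], [0.0, 1.0], [-0.5, 0.2]]
print(private_aggregate(updates, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping bounds how much any single client can move the aggregate, which is what makes the added Gaussian noise sufficient for a client-level privacy guarantee; the actual privacy budget would come from a separate accountant that this sketch omits.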

Introducing Perturb-ability Score (PS) to Enhance Robustness Against Evasion Adversarial Attacks on ML-NIDS [10]

Our group proposed a novel Perturb-ability Score (PS) to identify the features of Network Intrusion Detection Systems (NIDS) that attackers can easily manipulate. We demonstrate that using PS to select only non-perturb-able features for ML-based NIDS maintains detection performance while enhancing robustness against adversarial attacks.
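Once every feature has a score, selecting non-perturb-able features reduces to a threshold filter applied before training. The sketch below assumes the scores are already computed and that higher values mean easier manipulation; the feature names, score values, rationale comments, and the 0.5 threshold are hypothetical, and the way PS itself is computed in [10] is not reproduced here.

```python
from typing import Dict, List


def select_features(ps: Dict[str, float], threshold: float) -> List[str]:
    """Keep only features whose perturb-ability score is below the threshold."""
    return sorted(name for name, score in ps.items() if score < threshold)


def project(record: Dict[str, float], keep: List[str]) -> List[float]:
    """Build the model input vector from the selected (hard-to-perturb) features only."""
    return [record[name] for name in keep]


# Hypothetical scores in [0, 1]; higher = easier for an attacker to manipulate.
ps_scores = {
    "fwd_pkt_len_mean": 0.9,  # illustrative: attacker shapes its own forward packets
    "flow_iat_mean": 0.8,     # illustrative: attacker can add delays between packets
    "bwd_pkt_len_mean": 0.2,  # illustrative: backward traffic comes from the victim side
    "dst_port": 0.1,
    "protocol": 0.0,
}
keep = select_features(ps_scores, threshold=0.5)
record = {"fwd_pkt_len_mean": 512.0, "flow_iat_mean": 0.03,
          "bwd_pkt_len_mean": 1200.0, "dst_port": 443.0, "protocol": 6.0}
print(keep)                   # ['bwd_pkt_len_mean', 'dst_port', 'protocol']
print(project(record, keep))  # [1200.0, 443.0, 6.0]
```

The same `keep` list has to be applied both when training the NIDS model and at inference time, so the model never sees the features an attacker could cheaply alter.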

References:

[1] Ibitoye, Olakunle, Rana Abou-Khamis, Mohamed el Shehaby, Ashraf Matrawy, and M. Omair Shafiq. “The Threat of Adversarial Attacks on Machine Learning in Network Security–A Survey.” arXiv preprint arXiv:1911.02621 (2019). https://arxiv.org/abs/1911.02621

[2] Abou Khamis, Rana, M. Omair Shafiq, and Ashraf Matrawy. “Investigating resistance of deep learning-based IDS against adversaries using min-max optimization.” In ICC 2020 - 2020 IEEE International Conference on Communications (ICC), pp. 1-7. IEEE, 2020. https://ieeexplore.ieee.org/abstract/document/9149117

[3] Abou Khamis, Rana, and Ashraf Matrawy. “Evaluation of adversarial training on different types of neural networks in deep learning-based IDSs.” In 2020 International Symposium on Networks, Computers and Communications (ISNCC), pp. 1-6. IEEE, 2020. https://ieeexplore.ieee.org/abstract/document/9297344

[4] Abou Khamis, Rana, and Ashraf Matrawy. “Could Min-Max Optimization be a General Defense Against Adversarial Attacks?” In 2024 International Conference on Computing, Networking and Communications (ICNC), pp. 671-676. IEEE, 2024. https://ieeexplore.ieee.org/abstract/document/10556266

[5] Shehaby, Mohamed el, and Ashraf Matrawy. “Adversarial Evasion Attacks Practicality in Networks: Testing the Impact of Dynamic Learning.” arXiv preprint arXiv:2306.05494 (2023). https://arxiv.org/abs/2306.05494

[6] ElShehaby, Mohamed, and Ashraf Matrawy. “The Impact of Dynamic Learning on Adversarial Attacks in Networks (IEEE CNS 23 Poster).” In 2023 IEEE Conference on Communications and Network Security (CNS), pp. 1-2. IEEE, 2023. https://ieeexplore.ieee.org/abstract/document/10288658

[7] ElShehaby, Mohamed, Aditya Kotha, and Ashraf Matrawy. “Introducing Adaptive Continuous Adversarial Training (ACAT) to Enhance Machine Learning Robustness.” IEEE Networking Letters (2024). https://ieeexplore.ieee.org/abstract/document/10634900

[8] ElShehaby, Mohamed, Aditya Kotha, and Ashraf Matrawy. “Adaptive Continuous Adversarial Training (ACAT) to Enhance ML-NIDS Robustness.” TechRxiv (2024). https://www.techrxiv.org/users/850633/articles/1237424-adaptive-continuous-adversarial-training-acat-to-enhance-ml-nids-robustness

[9] Ibitoye, Olakunle, M. Omair Shafiq, and Ashraf Matrawy. “Differentially private self-normalizing neural networks for adversarial robustness in federated learning.” Computers & Security 116 (2022): 102631. https://www.sciencedirect.com/science/article/pii/S016740482200030X

[10] elShehaby, Mohamed, and Ashraf Matrawy. “Introducing Perturb-ability Score (PS) to Enhance Robustness Against Evasion Adversarial Attacks on ML-NIDS.” arXiv preprint arXiv:2409.07448 (2024). https://arxiv.org/abs/2409.07448