Kavita Mistry is a PhD candidate in the Department of History.

If you have seen me walking around campus, you will know me as the “tiny girl with the massive backpack” because I carry a 5 lb gaming laptop everywhere I go. But it’s for good reason. I am returning as a Research Assistant for the Computational Research in the Ancient Near East (CRANE) project. My areas of interest involve using photogrammetry to build 3D models of cultural heritage artifacts and monuments, and I am now branching out to archaeological sites and architecture.

I was first introduced to photogrammetry during my bachelor’s degree, where I conducted a study on the use of photogrammetric stereoplotting for the foundations of an ancient palace at an excavation site. After staring at, and drawing, 15,000 stones on a computer screen through bulky 3D goggles (I know, sounds exhilarating, right?), I just knew I had to keep exploring this technology. Throughout my MA, my research focused on the art of replicative technology from past to present, and I explored modern techniques that use photogrammetry to build 3D models that can eventually be 3D printed. I wanted to explore different ways to bring accessible and haptic experiences to museum spaces, to promote a different kind of learning that could enchant visitors and engage all of their senses. But there is so much to explore about photogrammetry beyond producing a 3D model and printing it.

Photogrammetry

I’m talking about photogrammetry like it’s a common everyday word. Here is a scholarly definition of photogrammetry:

“the art, science and technology of obtaining reliable information about physical objects and environment through processes of recording, measuring and interpreting photographic images and patterns” (Wolf et al., 2014, 1).

And here is my “photogrammetry for newbies” explanation that makes sense in my head. Photogrammetry uses the process of dense image matching or best point matching. When taking photos of an object, you want at least 75% overlap between images, and you want those images taken from many different angles and heights. The reason is that when you throw your images into a program that takes them and spits out a 3D model, the computer recognizes the same point on the object in multiple images and generates a point in 3D space using geographic references, camera position, and points of intersection. Once enough of these points have been generated, you can create a mesh, which connects the points together in little triangular formations, and from there you can generate faces and textures and voilà, a 3D model. Now this is just a very basic description of what happens, but what happens when we let the computer imagine the images from which we generate the photogrammetry?
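To make the "points of intersection" idea a little more concrete, here is a minimal sketch of how one 3D point could be recovered from two photos. It assumes we already know each camera's position and the direction from that camera toward the same matched feature; the function name `triangulate` and the example coordinates are made up for illustration, and real photogrammetry software solves a much larger version of this problem across thousands of points and many cameras at once.

```python
def triangulate(c1, d1, c2, d2):
    """Estimate a 3D point from two camera rays.

    c1, c2: camera positions (x, y, z).
    d1, d2: directions from each camera toward the matched feature.
    Returns the midpoint of the shortest segment joining the two rays,
    since rays from real photos rarely intersect exactly.
    """
    def dot(u, v):
        return sum(x * y for x, y in zip(u, v))

    w = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)

    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique closest point")

    s = (b * e - c * d) / denom  # distance along ray 1
    t = (a * e - b * d) / denom  # distance along ray 2

    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Hypothetical example: two cameras 4 units apart looking at the same stone.
point = triangulate((0, 0, 0), (1, 2, 5), (4, 0, 0), (-3, 2, 5))
print(point)
```

The parallel-ray check hints at why overlap from different angles matters: two photos taken from nearly the same viewpoint give nearly parallel rays, and the estimated depth becomes unreliable.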

Generative Adversarial Networks

For XLab, I have had the opportunity to take my knowledge of photogrammetry further and explore other applications, including generative adversarial networks (GANs) and the concept of deep learning. Using GANs means training two networks, a generator and a discriminator, in competition with each other, so that they learn to recognize patterns within data and create synthetic representations of that data that could pass for real images (this is in contrast with ‘diffusion’, the method the latest image generators use; this post explains and explores the differences). Through the interaction of these two networks, we can train the machine to recognize certain patterns in the data, and the machine then produces an image or model of what it has learned in 3D space. After training the machine to generate photographs of an amphitheatre (for instance), I get it to generate images drawn from the latent space (the machine’s understanding of ‘amphitheatre’), which I then feed into the photogrammetry program. It’s an approach that relies on breaking things, and exploring the ‘failure modes’, to understand something of how computers see and even ‘understand’ archaeology.
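The generator-versus-discriminator competition described above can be sketched with a deliberately tiny toy. This is not the image GAN used in the project: instead of photographs, the "real data" here are just numbers clustered around 4, the generator is a straight line applied to random noise, and the discriminator is a single logistic unit. Every name and setting (`train_toy_gan`, the learning rate, the target value 4) is an assumption chosen for illustration.

```python
import math
import random


def sigmoid(v):
    # Numerically stable logistic function.
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * z + b
    w, c = 0.0, 0.0  # discriminator: p(real) = sigmoid(w * x + c)

    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # a sample of "real" data
        z = rng.gauss(0.0, 1.0)     # a point in the latent space
        fake = a * z + b            # the generator's attempt

        # Discriminator step: score real samples high, fake samples low.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = -(1.0 - d_real) * real + d_fake * fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: nudge the fake sample toward what the
        # updated discriminator currently believes is real.
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1.0 - d_fake) * w
        a -= lr * grad_fake * z
        b -= lr * grad_fake

    return (a, b), (w, c)


generator, discriminator = train_toy_gan()
print("generator (a, b):", generator)
print("discriminator (w, c):", discriminator)
```

Even in a toy like this the two sides can chase each other rather than settle, which is a small taste of the failure modes that make GAN output interesting to study.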

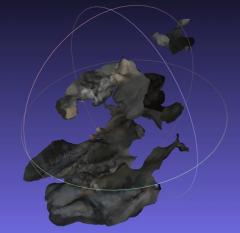

Machinic vision of modern photos of amphitheatres

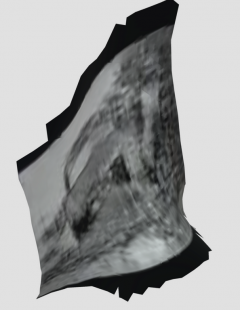

Machinic vision of historical photographs of amphitheatres

The alien blob that we see in the first image is actually a representation of what the machine has learned about an amphitheatre from modern tourist photos at Pompeii. The other image is drawn from early 20th-century photographs of amphitheatres in Italy. To us, neither one looks like any sort of structure, but to the machine it makes some sort of sense. And indeed, when we compare the two, there is clearly something different going on between the modern photography and the historical photography; the machine’s imagination lets us surface subtle differences which deform our expectations, and so we return to our source data with new perspectives and questions to ask!

For someone who is terrified of artificial intelligence because of the movie I, Robot, I’ve come a long way: here I am, training a computer to see patterns and recognize archaeological features and structures the way a human would, all in the name of good research!

Intersections of Photogrammetry and RTI

In this position, I am also looking at intersections between photogrammetry and Reflectance Transformation Imaging (RTI), to see how we can mesh the two techniques together to create high-resolution images and models that can be used further for research, conservation, and interactive purposes. While this work is still at the preliminary literature review stage, there has already been some promising insight into using photogrammetry and RTI together.

Conclusion

As my MA research focused primarily on the art of 3D digitization and 3D printing, this research position will help me throughout my PhD, as I venture into exploring the ethical implications of this technology as a technique for replicating cultural heritage materials and monuments. My philosophy has always been to understand the technology first before applying it in the real world. By understanding the nooks and crannies and breakages of the technology, how it works and what it can produce, and what happens when it fails, I can gain more insight into how to use this technology ethically in the museum and cultural heritage fields.

Bibliography:

Wolf, Paul R., Bon A. Dewitt, and Benjamin E. Wilkinson. Elements of Photogrammetry with Applications in GIS. McGraw-Hill Education, 2014.