A version of this post was originally put on Shawn Graham’s Electric Archaeology

Cap’n Picard knows how to work the computer.

What if you could do digital archaeology by simply describing what you wanted to happen? Or any kind of cultural heritage informatics work? Like the computer in Star Trek, what if you could just describe what you want to have happen, and the computer figures it out? The potential of what is currently being marketed as ‘AI’ is really a kind of autocorrect-on-steroids-to-the-nth degree. Not as snappy as ‘AI’, but basically: if it knows what a ‘completion’ looks like, what the desired end goal should be, then maybe these ‘Large Language Models’ (LLM) could be of use to us in the CHI space.

I have avidly been following all the latest developments in the llm scene; one of the best folks to follow is Simon Willison. Simon recently wrote,

A popular nightmare scenario for AI is giving it access to tools, so it can make API calls and execute its own code and generally break free of the constraints of its initial environment.

Let’s do that now!

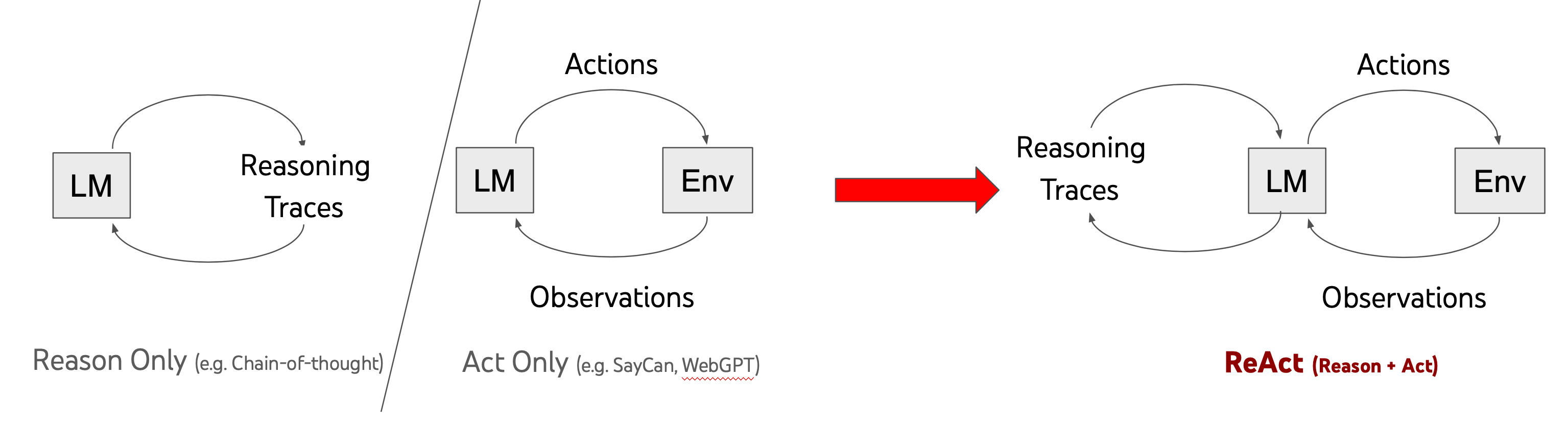

This is called a ‘ReAct’ model, for ‘reason and act’. In a ‘traditional’ gpt3 prompt, you’d give some text and the model would continue to complete the text, collapsing the probability of one letter/token over another based on the prompt text you began with. With more complex elaborations of this, you give it examples of the kind of output you want. LLMs have no access to the real world. That is, until you show them how to use an API. It was described in this paper

Simon made a simple implementation of it; there are other implementations out there, but what I like about Simon’s is that I can follow along and understand what it’s doing. What’s more, I can extend it myself. The prompt is straightforward enough:

prompt = “””

You run in a loop of Thought, Action, PAUSE, Observation.

At the end of the loop you output an Answer

Use Thought to describe your thoughts about the question you have been asked.

Use Action to run one of the actions available to you – then return PAUSE.

Observation will be the result of running those actions.

Your available actions are:

calculate:

e.g. calculate: 4 * 7 / 3

Runs a calculation and returns the number – uses Python so be sure to use floating point syntax if necessary

wikipedia:

e.g. wikipedia: Django

Returns a summary from searching Wikipedia

tailsx_search:

e.g. tailsx_search: Django

Search TailsX for that term

open_context_search:

e.g. open_context_search: Poggio

Search Open Context for that term

Always look things up on Wikipedia if you have the opportunity to do so.

Always look things up on TailsX if you have the opportunity to do so.

If a subject seems to be archaeological look things up on Open Context.

Example session:

Question: What is the capital of France?

Thought: I should look up France on Wikipedia

Action: wikipedia: France

PAUSE

You will be called again with this:

Observation: France is a country. The capital is Paris.

You then output:

Answer: The capital of France is Paris

“””

If this was all it was, it’d just hallucinate and pretend to access the web. The fun happens with the way the control is handled – ‘pause; you will be called again’ – and where you start defining your actions. Here’s one that goes and uses the API for a meta search engine that I added:

def tailsx_search(q):

return httpx.get("https://tailsx.extravi.dev/api", params={

"q": q,

"t": "text"

}).json()

(please excuse the wonky formatting, stupid wordpress)

and for Open Context,

def open_context_search(q):

return httpx.get("https://opencontext.org/query/.json?type=projects&response=uri-meta", params={

"q": q

}).json()

Which allows the chatbot to search Open Context for high-level descriptions of various archaeological projects who have published their data there.

The result:

Ok, that’s a pretty blurry screenshot, sorry. It says –

Thought: I should look this up on Open Context since Nubia is an archaeological site.

Action: open_context_search: Nubia Excavation

PAUSE

-- running open_context_search Nubia Excavation

Observation: []

Thought: It seems like there are no search results for "Nubia Excavation" on Open Context. I'll try searching using different keywords.

Action: open_context_search: Nubia archaeological sites

PAUSE

-- running open_context_search Nubia archaeological sites

Observation: [{'uri': 'http://opencontext.org/projects/2c7ff3c8-b299-4138-ab04-f4e17e49ad64',

'href': 'https://opencontext.org/projects/2c7ff3c8-b299-4138-ab04-f4e17e49ad64',

'citation uri': 'https://doi.org/10.6078/M74747Z0', 'label': 'The Amulets of the Kerma Culture',

'project label': 'The Amulets of the Kerma Culture', 'project href': 'https://opencontext.org/projects/2c7ff3c8-b299-4138-ab04-f4e17e49ad64',

'context label': 'Africa/Sudan', 'context href': 'https://opencontext.org/subjects/2572daff-7242-474a-9b5c-f34943a684b4',

'latitude': 21.22096935789715, 'longitude': 30.88143850649228, 'early bce/ce': -2500.0, 'late bce/ce': -1500.0,

'item category': 'Projects', 'icon': 'https://opencontext.org/static/oc/icons-v2/noun-research-project-2341022.svg',

'snippet': 'The research focused on a systematic comparative analysis, to confirm the apparent differences between the typologies found in the capital city

and those from Kerma <mark>sites</mark> located in the peripheral areas of the Kingdom, such as the <mark>sites</mark> in Upper and Lower <mark>Nubia</mark>,

and in the Fourth Cataract area. \n\nNotes:\n[1] Reisner, G.A. 1923a-b.', 'published': '2007-01-01T00:00:00Z', 'updated': '2023-02-26T20:10:02Z'}]

Observation: The search on Open Context for "Nubia archaeological sites" returned one result.

The project is called "The Amulets of the Kerma Culture" and it focuses on a systematic comparative analysis of amulets found in the capital city of Kerma and

those from peripheral sites of the kingdom, including Upper and Lower Nubia. The project was published in 2007 and updated as recently as February 2023.

So – a chatbot that knows to go to Wikipedia, websearch, or open context, depending on context. Oh, it can also do math. You can try it out here in this Colab Notebook . You’ll need your own OpenAI key. If you look at my colab notebook, I have an experiment where I asked it to identify scholars and scholarship on Pompeii (trying to limit it to what it finds on Wikipedia so that it doesn’t fabricate any scholars) and then write the result as a Cypher query for a Neo4j knowledge graph.

I have another bot in the works, trained on archaeological data from Pompeii (from the Pompeian Artistic Landscapes Project). That bot converts its training into a vector embedding, and currently can only respond based on that archaeological data. I can ask it to compare (for instance) the treatment of Ariadne versus Theseus in Pompeian wall paintings. I want to enhance its abilities with similar agency. It’s built with LangChain; I’ll probably blog about it in due course.

Thank you to Eric Kansa for walking me through some of the Open Context API. None of this is his fault.

Update March 24th

Developments continue apace; OpenAI now has ‘plugins for ChatGPT‘. These are extensions that operate (as I understand things) rather similar to what we did above. By making it relatively straightforward to do this within the API itself, things are going to escalate even faster. The scientific computing engine/platform Wolfram has just released a plugin to tie ChatGPT to their computing resources; it looks pretty… wow. However – it still hallucinates! See Simon Willison’s walk through of how to build one of these plugins on your own data (which we in the XLab will be trying out for sure). I’m excited to try out Simon’s walkthrough, because Simon makes the excellent data publishing tool datasette; I used that tool some time ago to turn the Canada Science and Technology Museum’s online catalogue into an API. It should be trivial to connect that to chatgpt now through the plugin. What would you ask a museum catalogue if you knew it could answer back?

We know these models are fraught with dangers, but this kind of enhanced querying could be extremely useful. We progress with our eyes open…