Current guidance, navigation, and control (GN&C) technologies (robots’ brains) are designed to work with architecturally fixed system models (self-models, body maps). Most work to date has focused on GN&C algorithms that minimize the effects of uncertainty and disturbances in such models. Although these strategies capture some nonlinearities and uncertainties, they fail when topological changes (e.g., damage) occur in the system or its environment. Unlike traditional robots, future robotic systems must be conscious of their body, their surrounding environment, and the emerging relations linking the two, so that they can adapt quickly and intelligently to changes in and around the system or in mission requirements. In particular, reliable long-term autonomy requires adaptability to architectural changes. Robots’ GN&C systems must therefore be equipped with a set of developmental functions, including synthetic emotional investments, that are analogous to functions of the human brain. This research focuses on two themes:

- Developing GN&C systems based on brain-inspired Spiking Neural Networks (SNNs), and extracting laws of robotic adaptability by investigating how the human brain adjusts when interacting with a damaged robot under different learning conditions.

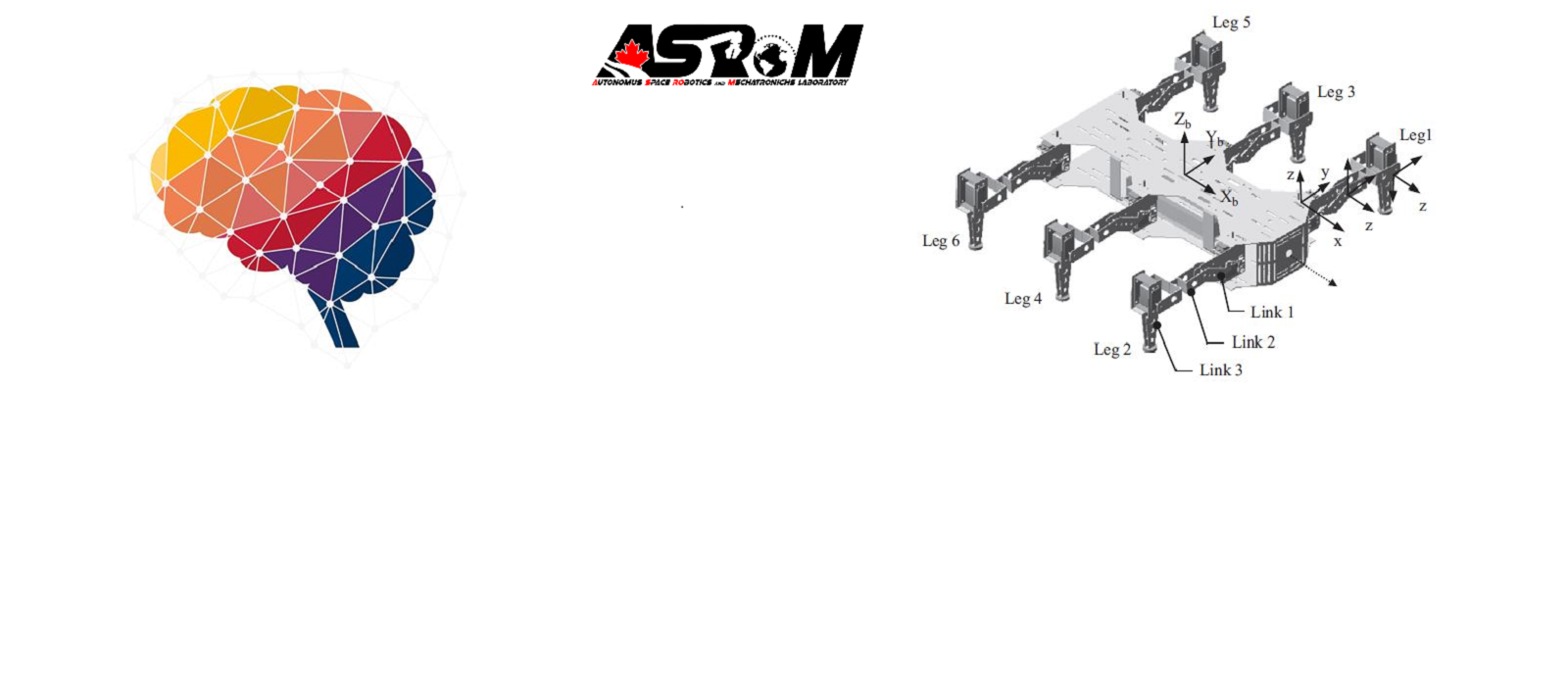

- Developing geometric, modular GN&C technologies for reconfigurable robotic systems that can rapidly adapt to changes in topology and variations in constraints.