One of the key challenges in any dual-spacecraft mission involving rendezvous and proximity operations is the onboard determination of the pose (i.e., relative position and orientation) of a target object with respect to a robotic chaser spacecraft equipped with computer vision sensors. The relative pose of the target is crucial information upon which real-time guidance, trajectory control, and docking/capture maneuvers are planned and executed. Besides relative pose determination, other computer vision tasks such as detection, 3D model reconstruction, component identification, and foreground/background segmentation are often required.

Before deploying any computer vision algorithm in an operational scenario, it is important to ensure that it will perform as expected, regardless of the harsh lighting conditions encountered in low-Earth orbit and even when applied to unknown, uncooperative targets. To address the need to train and/or test computer vision algorithms across a wide range of scenarios, conditions, target spacecraft, and visual features, the Spacecraft Robotics Laboratory has developed labeled datasets of images of various target spacecraft, ranging from actual on-orbit imagery to synthetic rendered models.

EVent-based Observation of Spacecraft (EVOS) Dataset

The EVOS (EVent-based Observation of Spacecraft) dataset is designed to support research in event-based vision for autonomous on-orbit inspection and space debris removal. The dataset was collected at Carleton University’s Spacecraft Proximity Operations Testbed using an IniVation DVXplorer Micro event camera mounted on a stationary chaser spacecraft platform which observes a moving target spacecraft. The observed target is covered in multi-layer insulation and is equipped with a solar panel and a docking cone. The dataset contains 15 unique experiments, each approximately 300 seconds long, featuring distinct trajectories under different lighting conditions. Time-synchronized ground-truth position and velocity data are provided via a PhaseSpace motion capture system with sub-millimeter accuracy.
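
Event timestamps are typically much finer than motion-capture samples, so pairing an event with ground truth usually means interpolating between the two nearest samples. The following is a minimal sketch of that step, assuming the ground truth has been loaded as a sorted list of timestamps and a matching list of values. The function name, argument layout, and units are hypothetical and are not taken from the dataset documentation.

```python
from bisect import bisect_right


def interpolate_ground_truth(gt_times, gt_values, query_time):
    """Linearly interpolate a ground-truth signal at query_time.

    gt_times  : timestamps sorted in increasing order (assumed layout)
    gt_values : one sample per timestamp, e.g. the x position
    Queries outside the recorded interval are clamped to the end samples.
    """
    if not gt_times or len(gt_times) != len(gt_values):
        raise ValueError("gt_times and gt_values must be non-empty and equal in length")
    if query_time <= gt_times[0]:
        return gt_values[0]
    if query_time >= gt_times[-1]:
        return gt_values[-1]
    # Index of the first sample strictly later than the query time.
    upper = bisect_right(gt_times, query_time)
    lower = upper - 1
    t0, t1 = gt_times[lower], gt_times[upper]
    weight = (query_time - t0) / (t1 - t0)
    return gt_values[lower] + weight * (gt_values[upper] - gt_values[lower])
```

The same function can be called once per axis (x, y, and each velocity component) for every event timestamp of interest.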

Crain, A., and Ulrich, S., “EVent-based Observation of Spacecraft (EVOS) Dataset,” Federated Research Data Repository, 2025. https://doi.org/10.20383/103.01538.

Related Publications

Crain, A., and Ulrich, S., “Event-Based Spacecraft Representation Using Inter-Event-Interval Adaptive Time Surfaces,” 36th AIAA/AAS Space Flight Mechanics Meeting, Orlando, FL, 12-16 Jan, 2026.

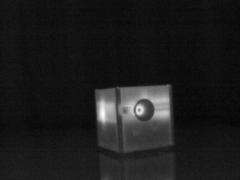

Spacecraft Thermal Infrared (STIR) Dataset

The Spacecraft Thermal Infrared (STIR) Dataset is used primarily to train machine learning-based pose determination methods applied to spacecraft proximity operations. STIR consists of 16,641 thermal infrared pictures of a free-floating spacecraft target platform, captured at Carleton University’s Spacecraft Proximity Operations Testbed by an ICI-9320 thermal camera installed on the chaser spacecraft platform. For each of the frames, the relative planar three-degree-of-freedom pose of the target with respect to the chaser is included (x and y positions, and yaw angle). This ground truth data was acquired by a PhaseSpace motion capture system with submillimeter accuracy.
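
When a pose estimate is compared against a planar (x, y, yaw) label of this kind, the yaw difference has to be wrapped so that, for example, 179° and -179° count as 2° apart rather than 358°. The sketch below shows one way to compute position and yaw errors. It assumes poses are given as (x, y, yaw) tuples with yaw in radians; the function names and units are illustrative assumptions, not part of the dataset.

```python
import math


def wrap_angle(angle_rad):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle_rad + math.pi) % (2.0 * math.pi) - math.pi


def planar_pose_error(predicted, truth):
    """Return (position_error, yaw_error) between two planar poses.

    predicted, truth : (x, y, yaw) tuples, yaw in radians (assumed convention)
    position_error   : Euclidean distance in the same unit as x and y
    yaw_error        : absolute wrapped yaw difference in radians
    """
    dx = predicted[0] - truth[0]
    dy = predicted[1] - truth[1]
    position_error = math.hypot(dx, dy)
    yaw_error = abs(wrap_angle(predicted[2] - truth[2]))
    return position_error, yaw_error
```

Averaging these two quantities over a held-out set of frames gives a simple summary of estimator accuracy.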

Budhkar, A., and Ulrich, S., “Spacecraft Thermal Infrared (STIR) Dataset,” Federated Research Data Repository, 2025. https://doi.org/10.20383/103.01333.

Related Publications

AAS/AIAA Space Flight Mechanics Meeting, Kaua’i, HI, 19-23 Jan, 2025.

Satellite Segmentation (SATSEG) Dataset

The SATellite SEGmentation (SATSEG) dataset is used to benchmark segmentation methods applied to space-based applications. SATSEG consists of 100 color and grayscale pictures of actual spacecraft and laboratory mockups, captured by visual and thermal cameras. The spacecraft in this dataset include Cygnus, Dragon, ISS, Space Shuttle, CubeSats, Hubble, Orbital Express, and Radarsat. Also included in SATSEG is manually produced ground-truth data that provides a binary mask for each image (foreground = 255 / background = 0).
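
With masks encoded this way, a segmentation result can be scored against the ground truth using intersection over union (IoU). IoU is one common choice of metric; the source does not state which metric the benchmark uses. The sketch below assumes both masks are equally sized 2D sequences of pixel values (lists of rows), and the function name is hypothetical.

```python
def mask_iou(predicted_mask, truth_mask, foreground=255):
    """Intersection over union of two binary masks.

    Both masks are 2D sequences of rows with identical shapes.
    Pixels equal to `foreground` count as foreground; all others as background.
    Returns 1.0 when neither mask contains any foreground pixel.
    """
    if len(predicted_mask) != len(truth_mask):
        raise ValueError("masks must have the same number of rows")
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(predicted_mask, truth_mask):
        if len(pred_row) != len(truth_row):
            raise ValueError("mask rows must have the same length")
        for pred_pixel, truth_pixel in zip(pred_row, truth_row):
            pred_fg = pred_pixel == foreground
            truth_fg = truth_pixel == foreground
            intersection += pred_fg and truth_fg
            union += pred_fg or truth_fg
    return intersection / union if union else 1.0
```

For full-resolution images, the same computation is usually done with array operations, but the logic is identical.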

Shi, J.-F., and Ulrich, S., “Satellite Segmentation (SATSEG) Dataset,” Borealis, 2018. https://doi.org/10.5683/SP3/VDAN02.

Related Publications

Shi, J.-F., and Ulrich, S., “Uncooperative Spacecraft Pose Estimation using Monocular Monochromatic Images,” AIAA Journal of Spacecraft and Rockets, Vol. 58, No. 2, 2021, pp. 284–301.

AIAA SPACE and Astronautics Forum and Exposition, AIAA Paper 2018-5281, Orlando, FL, 2018.