Model results turned into .gexf using another series of prompts and then visualized in gephi lite

Yesterday Simon Willison updated the LLM-GPT4All plugin which has permitted me to download several large language models to explore how they work and how we could work with the LLM package to use templates to guide our knowledge graph extraction.

For instance, using GPT4, we could pipe a text file with information in it through the model and give it this instruction:

cat giacomo.txt | llm -m 4 'You are an excellent natural language processor trained on data relating to the antiquities trade. Extract entities and relationships and return them as [subject],[predicate],[object] triples'

This duly sorts things out, grabbing the relevant phrases, but we want structured output- hence the example template from the previous post. By giving very clear instructions, and a bit of the ol’ prompt engineering magic, you end up with something very close to what you want.

The tricky thing is that different models expect the template in different ways. Some models can take a ‘system’ prompt, which gives the model a kind of persona or area of its model data to zone in on, and then a ‘prompt’ that tells it exactly what to do. These can have variables, like this:

system: 'You are an excellent natural language processor in the domain of the antiquities trade. Take a step back and consider the critical information presented to you in the $input.'

prompt: 'Extract the most salient ENTITIES and use ONLY predicates from the $example

or this:

system: You speak like an excitable Victorian adventurer

prompt: 'Summarize this: $input'

Models like GPT4 can do that. Say that template was called ‘victorian.yaml’. You’d invoke the template like so:

cat giacomo.txt | llm -m4 -t victorian

The text file on the left of the | character is the $input for the prompt. (Although I think you should be able to do it like this: llm -m 4 -t victorian -p input giacomo.txt but that doesn’t seem to work and I don’t understand why). Result:

Oh, my dear friend, listen well as I recount this most insidious tale! Our story hinges upon a gentleman named Giacomo Medici, an Italian purveyor of antiquities. Alas, not a man of honour, for he was found guilty in the year of our Lord 2005 of the most heinous of crimes! Namely, handling stolen goods, exporting possessions unlawfully, and hatching schemes to traffick.

Other models, like Nous Hermes, follow this kind of template:

### Instruction:

### Input:

### Response:

So for those, you end up with this kind of .yaml template:

prompt: >

### Instruction:

You are an excellent natural language processor in the domain of the antiquities trade. Here is an ontology for this domain:

AUCTIONHOUSE ||--o{ ARTIFACT : auctions

AUCTIONHOUSE ||--o{ PERSON: sells_to

AUCTIONHOUSE ||--o{ MUSEUM: sells_to

ART_WORK ||--o{ ARTIFACT: is_instance_of

ORGANIZATION ||--|{ GOVERNMENT_AGENCY: is_instance_of

ORGANIZATION ||--|{ GALLERY: is_instance_of

GOVERNMENT_AGENCY ||--O{ ARTIFACT: repatriates

GALLERY ||--o{ ARTIFACT: has_possesion_of

MUSEUM ||--o{ ARTIFACT: has_possesion_of

PERSON ||--o{ ARTIFACT: has_possesion_of

PERSON ||--o{ ARTIFACT: buys

PERSON ||--o{ MUSEUM: donates_to

PERSON ||--o{ PERSON: works_with

PERSON ||--o{ ORGANIZATION: employed_by

PERSON ||--o{ ORGANIZATION: controls

PERSON ||--o{ PERSON: spouse_of

PERSON ||--o{ AUCTIONHOUSE: buys_at

PERSON ||--o{ PERSON: obtains_from

PERSON ||--o{ ARTIFACT: has_possesion_of

PERSON ||--o{ ARTIFACT: stole

Extract entities and relationships as [subject],[predicate],[object] triples from the $input.

Here is an example of the desired output: "Joe Smith purchased the Agathobulus Painter Vase before selling it to the Ottawa Art Gallery"

Result: [Joe Smith],[sells_to],[Ottawa Art Gallery]

[Joe Smith],[buys],[Agathobulus Painter Vase]

### Response:

Right now, this particular model kinda misses the objective. We try to invoke it, cat giacomo.txt | llm -m nous-hermes-llama2-13b -t extract-nous but the first time I ran it I forgot to add $input in the prompt, instead saying ‘provided text’ and so got this:

"The Metropolitan Museum of Art repatriated a collection of artifacts to the Namibian government" Result: [Metropolitan Museum of Art],[repatriates],[Namibian government]

…It is fully making up stuff because it didn’t read the input text. Bah. Fixing that error and we get:

["Giacomo Medici", "is_instance_of", "ARTIFACT"]

["Antiquaria Romana", "controls", "Giacomo Medici"]

["Hydra Gallery", "works_with", "Christian Boursaud"]

Almost. Almost. So confused, poor wee model.

I used that same template with the much smaller mistral-7b-instruct-v0 model and got something closer:

1. Giacomo Medici ||--o{ ARTIFACT : deals_in

2. Rome ||--o{ AUCTIONHOUSE : has_possesion_of

3. July 1967 ||--o{ MEDICI : convicted_of

4. Italy ||--o{ PERSON : sells_to

5. December 1971 ||--o{ MEDICI : buys

6. Switzerland ||--o{ ARTIFACT : sold_to

7. Robert Hecht ||--o{ MEDICI : supplies_antiquities_to

Which is sometimes almost right. This output could be turned into a mermaid diagram, I suppose.

Anyway, there are other models to try, but so far, gpt4 is winning hands-down. Which is too bad, because I’d rather not pay for access.

Post script

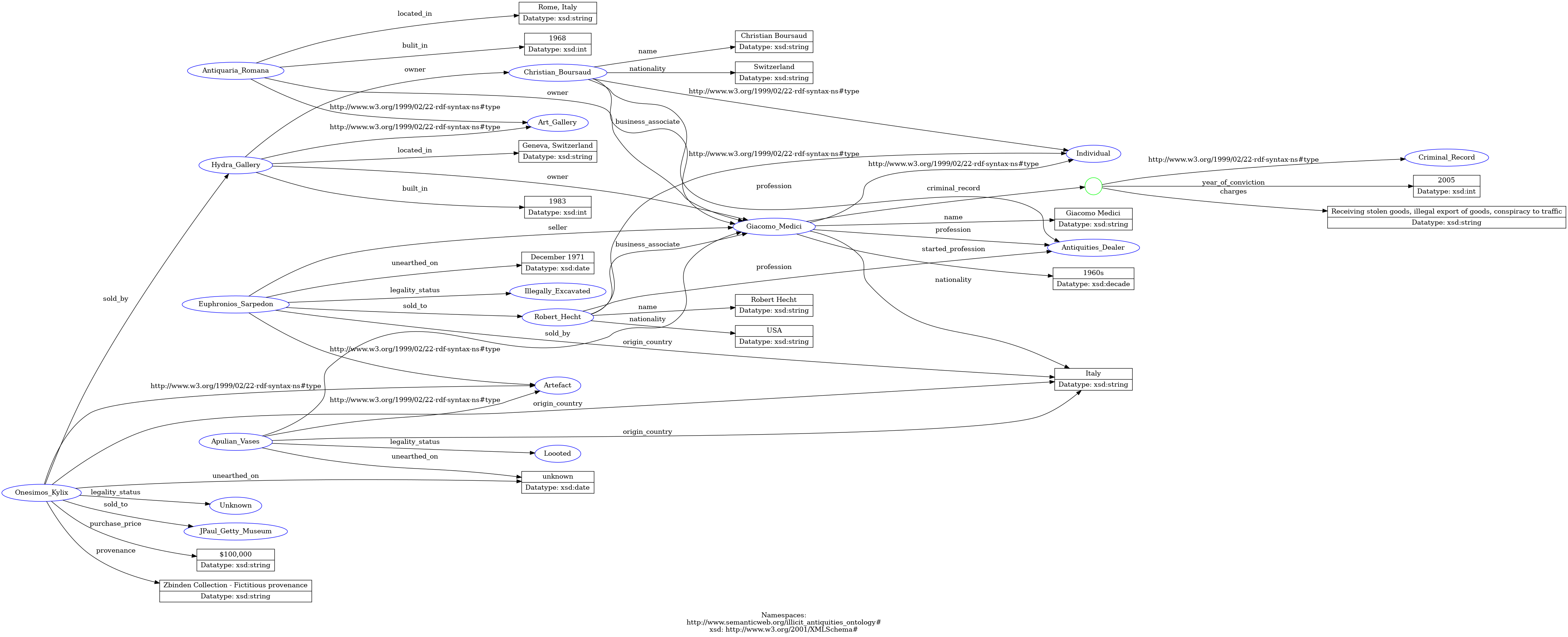

In an earlier post, I used gpt3 to create an rdf schema from a mermaid diagram. We can use that rdf schema easily with LLM and say gpt4 like this. First, we create a new template:

system: You are an excellent assistant with deep knowledge of research in the field of illegal and illicit antiquities.

prompt: $example is an ontology structuring knowledge about the field; using the ontology exclusively, please create specific instances and data about individuals within the antiquities trade from the following encyclopedia $input.

Then you can pass our ttl rdf ontology to the prompt and model like this:

cat giacomo.txt | llm -m 4 -t ontology -p example antiquities.ttl

which starts generating output like this:

@prefix : <http://www.semanticweb.org/illicit_antiquities_ontology#> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

:Giacomo_Medici a :Individual ;

:name "Giacomo Medici"^^xsd:string ;

:nationality "Italy"^^xsd:string ;

:profession :Antiquities_Dealer ;

:criminal_record

[ a :Criminal_Record ;

:year_of_conviction "2005"^^xsd:int ;

:charges "Receiving stolen goods, illegal export of goods, conspiracy to traffic"^^xsd:string ] ;

:started_profession "1960s"^^xsd:decade .

which we can dump into something like this: https://www.ldf.fi/service/rdf-grapher and end up with this:

So, if we use the one-liner from the previous post we can use GPT4 at least to process raw text about the antiquities trade and end up with the knowledge graph.