Research Software Development

The Research Software Development team works with researchers to develop purpose-built software that furthers their research programs. If you believe this service would be of value to you, please contact RCS. If you use any custom software produced with the help of the Research Software Development team in your published research, we would greatly appreciate an acknowledgement of RCS in your publication. Note: the Research Software Development team reserves the right to publicize any project in which it participates.

Support and Maintenance

If the research development project will run as a service hosted by Carleton University, the underlying systems that ITS already supports (VM, operating system, database, web servers, etc.) will follow ITS’ current maintenance schedule. For underlying systems hosted outside Carleton, or systems that Carleton does not support, responsibility for regular software updates falls to the host provider or the researcher.

The software development team will work closely with the researcher during development to ensure that all project goals are met. Once the software is formally handed over to the researcher, support and maintenance (bug fixes, feature improvements, etc.) will be provided on a best-effort basis and by request only. To be able to support researchers long after the software was initially developed, the team will stick to a core set of relevant technologies and fully document each project to allow for easier knowledge transfer.

Software Licensing

Software for research is highly reusable, and producing well-architected, well-written, fully tested, and properly documented code not only helps the individual researcher but contributes to the research community as a whole. In this spirit, the code generated by the Research Software Development team will be licensed under the GNU General Public License, a Free and Open Source Software (FOSS) licence, whenever possible and appropriate. Once a project has reached a suitable state, the code will be released on the public RCS GitHub page.

Projects

A list of projects contributed to by the Research Software Development team, along with links to the publicly available open source code, is given below.

- Conservation Decision Tools – Prof. Joseph Bennett (Department of Biology)

In Canada and elsewhere, biological diversity – and the many cultural, economic and aesthetic benefits it provides us – is under threat. Protecting and carefully managing land is the most important thing we can do to reduce this threat. However, decisions of where and how to protect and manage lands are always difficult, especially where high biodiversity overlaps with many competing interests. Prof. Joseph Bennett from the Department of Biology is partnering with a Nature Conservancy of Canada (NCC) team led by Dr. Richard Schuster to co-design user-friendly decision support tools for land acquisition, stewardship and monitoring. NCC will use these tools to make its acquisition and stewardship planning more efficient, and to harmonize its goals and processes nationally. The team is also developing public-facing tools that can be used by government agencies, municipalities and land trusts to help manage their land use.

The Research Software Development team has supported this research by enhancing existing widgets and implementing new widgets for the front-end of the Conservation Decision Tools, a user-friendly platform for land acquisition, stewardship, and monitoring. The front-end is built upon R Shiny. Technologies used include: JavaScript (including the jQuery and D3.js – Data-Driven Documents libraries), CSS (including Bootstrap) and HTML.

- Using Machine Learning for Building Energy Consumption Estimation – Prof. Scott Bucking (Department of Civil and Environmental Engineering)


Introduction

Estimation of energy consumption is an important factor in designing efficient buildings. Physics-based simulations allow engineers to determine the best parameters for energy efficiency given different environmental factors; examples of such parameters include the number of windows on a given wall, the position of the building relative to the sun, the type of heating and cooling systems, and the efficiency of the windows. In addition, each building behaves differently under variable weather conditions and in different geographic locations. Hourly energy consumption estimates are important not only for designing a building, but also for estimating energy consumption under different climate conditions that may arise. Scaling up the simulations allows energy providers to plan power distribution for entire cities under different weather scenarios, thereby decreasing downtime and improving service quality.

While physics-based simulations provide good-quality energy consumption estimates, they tend to take a significant amount of time to run, and as parameters and weather constraints change, the time required to estimate hourly energy consumption can become impractical. In this project, the use of machine learning models for hourly energy estimation was explored using two approaches: a feed-forward deep neural network, and a hybrid model that incorporates Long Short-Term Memory (LSTM) layers. Compared with the physics-based simulations, both models achieved similar results, with low mean squared error, while running faster.

Methodology

Dataset

Simulations using the EnergyPlus software were conducted with a range of building parameters and weather conditions. The output of these simulations, the estimated hourly energy consumption, was then used as the gold standard for energy consumption. A subset of the parameters from the EnergyPlus simulations was used as input to machine learning models that estimate energy consumption. The results were compared with the physics-based simulations using the mean squared error to determine model performance.
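The comparison metric is the standard mean squared error over the hourly series. A minimal sketch, assuming the EnergyPlus output and the model predictions are already aligned hour by hour as plain lists of values in kJ; the sample numbers are made up for illustration.

```python
from typing import Sequence


def mean_squared_error(simulated: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean squared error between reference (physics-based) and predicted hourly values."""
    if len(simulated) != len(predicted):
        raise ValueError("series must have the same length")
    if not simulated:
        raise ValueError("series must not be empty")
    return sum((s - p) ** 2 for s, p in zip(simulated, predicted)) / len(simulated)


# Hypothetical hourly consumption values in kJ, for illustration only.
energyplus_kj = [1200.0, 1350.0, 900.0]
model_kj = [1180.0, 1400.0, 910.0]
print(mean_squared_error(energyplus_kj, model_kj))  # → 1000.0
```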

Models

Traditional Feed-Forward Deep Neural Network

When designing a feed-forward deep neural network, there are several parameters to define the architecture of the model. First, the number and type of input neurons must be determined, as well as the number of output neurons. In the case of predicting hourly energy consumption in buildings, the output is a single neuron representing the predicted energy consumption in kilojoules for a given hour. The input neurons, on the other hand, consist of building parameters and weather data.

The building parameters contain various types of data. For instance, the insulation R-value is a floating-point value, the building orientation is measured in degrees, and other parameters, such as window type, are categorical, with categories like single-pane or double-pane. To feed these parameters into the network, numerical values are scaled between 0 and 1, while categorical parameters are encoded using a technique called one-hot encoding. Scaling the parameters is crucial to improve training speed and stability.
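A minimal sketch of the preprocessing described above. The R-value range used for scaling and the list of window types are hypothetical, and dividing orientation by 360 assumes angles in [0, 360); the project's actual feature set is not listed in the text.

```python
def min_max_scale(values, lo=None, hi=None):
    """Scale numeric values linearly into [0, 1] using the given (or observed) range."""
    lo = min(values) if lo is None else lo
    hi = max(values) if hi is None else hi
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(value, categories):
    """Encode a categorical value as a list with a single 1.0 at the category's index."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1.0 if c == value else 0.0 for c in categories]


WINDOW_TYPES = ["single-pane", "double-pane", "triple-pane"]  # hypothetical categories


def encode_building(r_value, orientation_deg, window_type, r_range=(1.0, 10.0)):
    """Build one input vector: scaled numeric features followed by one-hot categories.

    r_range is an assumed min/max R-value used for scaling.
    """
    r_scaled = min_max_scale([r_value], *r_range)[0]
    orientation_scaled = orientation_deg / 360.0  # degrees in [0, 360) mapped onto [0, 1)
    return [r_scaled, orientation_scaled] + one_hot(window_type, WINDOW_TYPES)


print(encode_building(5.5, 90.0, "double-pane"))  # → [0.5, 0.25, 0.0, 1.0, 0.0]
```

In practice the scaling range should come from the training data only, so that test data is scaled the same way.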

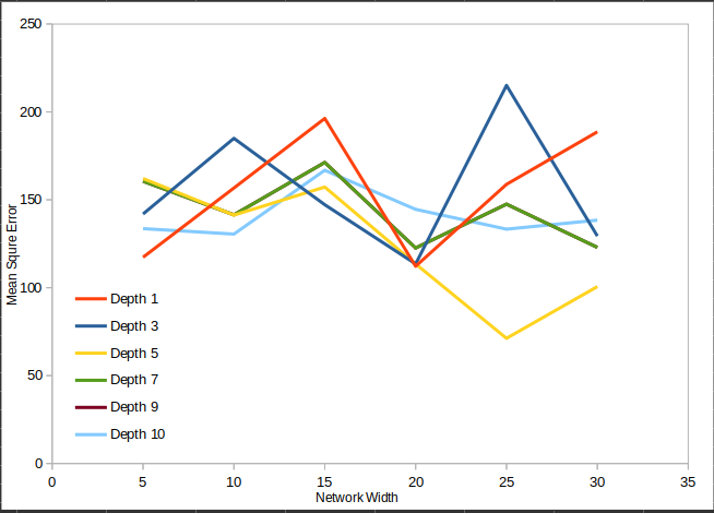

Once the input and output neurons have been decided upon, the layout of the hidden layers must be determined. For this project, each hidden layer was given the same number of neurons. The number of neurons in a layer is known as the width of the layer, while the total number of hidden layers is called the depth of the network. To determine the optimal width and depth, a grid search was performed over depths between 1 and 10 and widths between 5 and 30. The mean squared error between a test set of simulated hourly energy usage and the predicted usage was used as the figure of merit for determining which architecture was optimal. The results are shown in Figure 1.
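The grid search described above can be sketched as a loop over every (depth, width) pair that keeps the lowest test-set MSE. Here `evaluate` is a hypothetical callable that trains one network and returns its MSE, and the width step of 5 is an assumption, since the text gives only the range.

```python
from itertools import product
from typing import Callable, Iterable, Optional, Tuple


def grid_search(
    evaluate: Callable[[int, int], float],
    depths: Iterable[int] = range(1, 11),      # 1 to 10 hidden layers
    widths: Iterable[int] = range(5, 31, 5),   # 5 to 30 neurons (step size assumed)
) -> Tuple[int, int, float]:
    """Return (depth, width, mse) for the architecture with the lowest test-set MSE.

    `evaluate(depth, width)` is a hypothetical function that trains a network with
    `depth` hidden layers of `width` neurons each and returns its test-set MSE.
    """
    best: Optional[Tuple[int, int, float]] = None
    for depth, width in product(depths, list(widths)):
        mse = evaluate(depth, width)
        if best is None or mse < best[2]:
            best = (depth, width, mse)
    if best is None:
        raise ValueError("empty search grid")
    return best


# A toy stand-in for training, NOT real results: its minimum is placed at (5, 25).
toy_evaluate = lambda d, w: (d - 5) ** 2 + (w - 25) ** 2 / 100
print(grid_search(toy_evaluate))  # → (5, 25, 0.0)
```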

Figure 1: Results of the grid search used to determine the optimal network architecture.

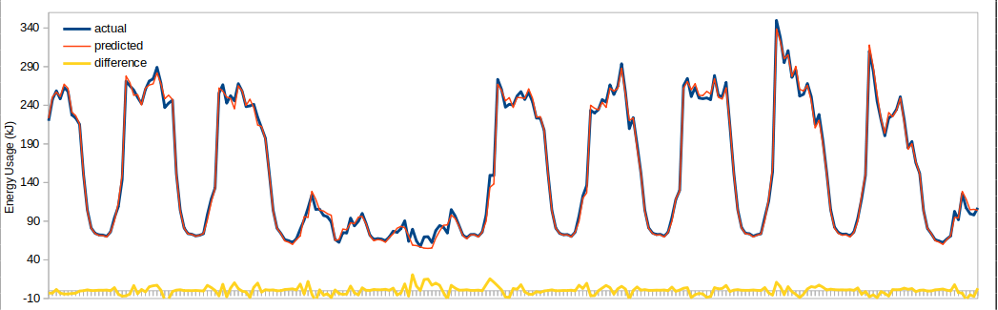

The optimal network architecture was found to have a depth of 5 and a width of 25. A sample of the predicted hourly energy usage compared with the energy usage calculated by the physics-based simulation is shown in Figure 2.

Figure 2: Sample of predicted vs. simulated hourly energy consumption for a 10 day period.

The recurring pattern in the data reflects the daytime versus nighttime cycle as well as workdays versus weekends. The 8 large peaks correspond to workdays, when people would be in the building and the climate control system would be set to a comfortable temperature. The short valleys between working hours, and the larger valley corresponding to the weekend, show lower energy consumption because the temperature inside the building would be set lower to conserve power.

Hybrid model

LSTM models can learn patterns from past values, which is important when working with time-series data such as weather conditions. The hybrid model used a two-layer LSTM for the weather data and a simple feed-forward network for the simulation parameters that are independent of time. For the LSTM, different lengths of weather history were tested. The outputs of the LSTM and feed-forward branches are then concatenated and used as inputs to four dense layers with hyperbolic tangent (tanh) activations, which produce the energy consumption estimate.
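The hybrid model needs two inputs per sample: a window of recent weather for the LSTM branch and the time-independent building parameters for the feed-forward branch. The sketch below shows one way to assemble such samples; the function name, the choice to include the target hour's own weather in the window, and the data layout are assumptions, and the model itself is not shown.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[List[List[float]], List[float], float]


def make_hybrid_samples(
    weather: Sequence[Sequence[float]],
    static_params: Sequence[float],
    energy: Sequence[float],
    window: int,
) -> List[Sample]:
    """Pair each hour's target with the preceding `window` hours of weather.

    Each sample is (weather_window, static_params, target):
    - weather_window feeds the LSTM branch (window x n_weather_features),
    - static_params feeds the feed-forward branch (time-independent),
    - target is the simulated energy for the last hour in the window.
    """
    if len(weather) != len(energy):
        raise ValueError("weather and energy series must align hour by hour")
    if window < 1:
        raise ValueError("window must be at least 1 hour")
    samples: List[Sample] = []
    for t in range(window - 1, len(energy)):
        weather_window = [list(row) for row in weather[t - window + 1 : t + 1]]
        samples.append((weather_window, list(static_params), energy[t]))
    return samples
```

Trying several values of `window` corresponds to testing different lengths of weather history; each length yields a different number of usable samples, since the first `window - 1` hours have no complete history.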

Results

In our exploration of different deep neural network architectures, we considered traditional feed-forward networks and a type of recurrent neural network known as Long Short-Term Memory (LSTM). Feed-forward networks are relatively simple and have fewer parameters, so they require less training data. However, they cannot retain knowledge of past information, which could be beneficial for future predictions. LSTM networks, on the other hand, have a built-in memory and can use past energy usage and weather data to make more informed predictions, but they require more training data because of their larger number of parameters.

Both models produced energy consumption estimates that closely matched the physics-based simulations. Figure 2 shows the actual (physics-based) and predicted (machine learning) energy usage over 10 days using the feed-forward model, as well as the difference between the two. While both models produced close estimates, the feed-forward model requires less computation time than the hybrid model, whose LSTM layers add computational cost. The feed-forward model leveraged additional features computed separately, such as the day and hour of the week, whereas the hybrid model used only the raw time-series values and was instead able to find patterns in the weather parameters. Both models proved satisfactory for building energy estimation, with faster simulation times than the physics-based simulations.
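The separately computed calendar features mentioned above can be derived from each timestamp with the standard library. This is a sketch; the project's exact feature definitions are not given, so the feature names and the Monday-first numbering are assumptions.

```python
from datetime import datetime


def calendar_features(ts: datetime) -> dict:
    """Derive simple calendar features from an hourly timestamp (hypothetical feature set)."""
    day_of_week = ts.weekday()  # Monday = 0 ... Sunday = 6
    return {
        "day_of_week": day_of_week,
        "hour_of_day": ts.hour,
        "hour_of_week": day_of_week * 24 + ts.hour,  # 0 ... 167
        "is_weekend": day_of_week >= 5,              # captures the workday/weekend cycle
    }


# 4 January 2021 was a Monday.
print(calendar_features(datetime(2021, 1, 4, 9)))
# → {'day_of_week': 0, 'hour_of_day': 9, 'hour_of_week': 9, 'is_weekend': False}
```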

Conclusion

Predicting energy consumption in buildings is important not only for designing buildings but also for energy providers that must plan and adapt to different weather conditions. At large scale, running physics-based building simulations requires large amounts of computational power, which might prove difficult. Machine learning models such as the feed-forward and hybrid models discussed in this project can speed up the estimation of energy consumption, allowing energy providers to better adapt to clients’ demands.

- A Cultural Typology of Vaccine Misinformation – Prof. Michael Christensen (Department of Law and Legal Studies) & Prof. Majid Komeili (School of Computer Science)

Misinformation related to public health advice is a problem that social media companies have tried to address throughout the pandemic. Despite efforts to take down or block obviously false information, misleading or inaccurate information about the COVID-19 vaccines has continued to proliferate. Our project tries to understand this problem by moving beyond an approach that treats online discourse about vaccines in a true/false binary. By collecting and analyzing Twitter data, our project identifies some of the underlying cultural themes that are most often associated with questionable information about vaccines. The study combines qualitative annotations of individual Tweets with automated text analysis to decipher the key cultural themes driving vaccine misinformation.

The Research Software Development team has supported this research by designing and implementing the Twitter annotator platform as a modern web application. This secure web application supports the collection and analysis of Twitter data along with qualitative annotation of Tweets. Technologies used for the web application include: JavaScript (including React and Redux), Python (including Flask) and Keycloak.

Source code:

- News-based Sentiment Analysis – Prof. Ba Chu (Department of Economics)

Prof. Ba Chu from the Department of Economics at Carleton University is interested in using Natural Language Processing, a type of artificial intelligence, and print media to develop insights into stock market dynamics. He has collected a large textual data set (over 2 TB) containing all free-subscription online newspapers in English collected over the period from 2016 to 2022. Before this vast trove of data could be used, it first needed to be analyzed for useful articles and organized into collections according to research goals.

For one research project, Prof. Chu was interested in studying sentiment in the United States at the state level. The RCS team assisted by developing tools to automate web searches for lists of local newspapers in each state. These lists were then used to sort the articles in his collection by state.

For another project, Prof. Chu was interested in finding articles that commented directly on specific companies’ stocks. For this, the RCS team developed efficient search algorithms to identify articles containing one or more stock symbols from a list of over 19 thousand possibilities. A brute-force search would have taken an exorbitant amount of time; by capitalizing on specific patterns in news reports on stocks and distributing the task over multiple processors, the team substantially reduced the search time. The main technology used for these projects was Python (including the Beautiful Soup library, which enabled web scraping of state newspaper directories).
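The text does not say which patterns were exploited, so the sketch below assumes one plausible pattern: an exchange-prefixed symbol in parentheses, such as `(NYSE: IBM)`. Instead of scanning every article once per symbol (over 19 thousand passes), it extracts candidates in a single regular-expression pass and checks them against a set, which is a constant-time lookup on average; the articles can then be split across processes. The pattern and function names are hypothetical.

```python
import re
from concurrent.futures import ProcessPoolExecutor
from functools import partial
from typing import FrozenSet, Iterable, List, Set

# Hypothetical pattern: a ticker cited as "(NYSE: IBM)" or "(NASDAQ:AAPL)".
TICKER_PATTERN = re.compile(
    r"\((?:NYSE|NASDAQ|Nasdaq|AMEX|TSX)\s*:\s*([A-Z]{1,5}(?:\.[A-Z])?)\)"
)


def find_symbols(text: str, symbols: FrozenSet[str]) -> Set[str]:
    """Return the known symbols cited in `text`: one regex scan plus set lookups."""
    return {m for m in TICKER_PATTERN.findall(text) if m in symbols}


def search_articles(
    articles: Iterable[str], symbols: FrozenSet[str], workers: int = 1
) -> List[Set[str]]:
    """Search many articles, optionally distributing the work over several processes."""
    articles = list(articles)
    if workers <= 1:
        return [find_symbols(a, symbols) for a in articles]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(partial(find_symbols, symbols=symbols), articles, chunksize=64))
```

On platforms that start worker processes with spawn (Windows, macOS), a call with `workers > 1` should sit under an `if __name__ == "__main__":` guard.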

Source code:

- Aptamerbase - Prof. Maria DeRosa (Department of Chemistry)

Aptamers are short DNA or RNA sequences that are able to recognize and bind to target molecules with high affinity and specificity. The process for discovering new aptamers, called SELEX, has been very successful; however, the conditions under which the experiment is run can vary from researcher to researcher and may have a dramatic impact on aptamer properties. The purpose of this project, led by Prof. Maria DeRosa from the Department of Chemistry, was to develop a database for housing aptamer selection experiment conditions and for querying these conditions, to help unravel their effect on aptamer properties. This resource is available online at aptamerbase.carleton.ca.

The Research Software Development team has supported this research by designing and implementing the “Aptamerbase” database platform as a modern web application. This secure web application is a database management system for housing and managing aptamer data. Technologies used for the web application include: JavaScript (including React and Redux), Python (including Flask, SQLAlchemy and Marshmallow), PostgreSQL and Keycloak.

Source code:

- Text Data Mining - Prof. Ahmed Doha (Sprott School of Business)

The text data mining project, led by Prof. Ahmed Doha of the Sprott School of Business, aims to collect the text of research papers from journals contributing to the field of business and management. This data will be used to create ontologies and data models that can help researchers make more effective and efficient use of this data in applications such as literature review assistance.

The Research Software Development team has supported this research by writing an application to download article information (full-text PDFs and various pieces of metadata) for specific publishers from Crossref, an online repository of research publication information. The code uses the researcher’s ORCID credentials to allow access to this data. The source code for this project is available on our public GitHub page. Technologies used include: Python (including the Beautiful Soup, Pandas and Requests libraries).
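The Crossref REST API can list a publisher’s (member’s) works and page through large result sets with a cursor. A sketch using only the standard library, assuming a numeric Crossref member ID for the publisher; the project itself used Requests and Beautiful Soup, and the ORCID-based access step is not shown. Full-text links in Crossref metadata are not always present or openly accessible.

```python
import json
from typing import Dict, Iterator, List, Optional, Tuple
from urllib.parse import urlencode
from urllib.request import urlopen

CROSSREF_API = "https://api.crossref.org"


def works_url(member_id: int, cursor: str = "*", rows: int = 100,
              mailto: Optional[str] = None) -> str:
    """Build a URL listing one member's works, using cursor-based deep paging."""
    params = {"rows": rows, "cursor": cursor}
    if mailto:
        params["mailto"] = mailto  # identifies the client to Crossref's "polite" pool
    return f"{CROSSREF_API}/members/{member_id}/works?{urlencode(params)}"


def iter_works(member_id: int, mailto: Optional[str] = None) -> Iterator[Dict]:
    """Yield every work record for a member, following 'next-cursor' until exhausted."""
    cursor = "*"
    while True:
        with urlopen(works_url(member_id, cursor, mailto=mailto)) as resp:
            message = json.load(resp)["message"]
        if not message["items"]:
            return
        yield from message["items"]
        cursor = message["next-cursor"]


def pdf_links(message: Dict) -> List[Tuple[str, str]]:
    """Extract (DOI, PDF URL) pairs from the 'message' object of a works response."""
    found = []
    for item in message.get("items", []):
        for link in item.get("link", []):
            if link.get("content-type") == "application/pdf":
                found.append((item["DOI"], link["URL"]))
    return found
```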

- Disclosure of AI Development and Application by North American Companies - Prof. Maryam Firoozi (Sprott School of Business)

Artificial intelligence (AI) is on the rise, with many countries around the world investing heavily in AI to boost their economies. However, many experts caution that this rapid growth in the development and application of AI in every aspect of life, without properly mitigating its risks, may eventually have devastating consequences for humanity. The for-profit sector, which plays a huge role in the development and application of AI, is largely unregulated with respect to AI accountability. The purpose of this study, led by Prof. Maryam Firoozi of the Sprott School of Business, is to understand whether and how firms in North America disclose information related to the development and application of AI, and whether this information has any substance. The outcome of this research will advance our understanding of AI disclosure as a mechanism for AI accountability.

The Research Software Development team has supported this research by developing a Python script that takes as input a list of websites and a list of keywords. Each website in the list is crawled and searched for occurrences of each keyword. The script first downloads the HTML of a page, then extracts its text and links. The text is searched for occurrences of the keywords, and the links are checked against a list of already visited pages. For each new link found, the process is repeated. In this way, all the links within the website’s domain are crawled and searched. By running this search in parallel, the RCS team reduced the execution time from days to hours. Moreover, the script has eliminated the daunting and time-consuming task of manually inspecting the websites for keywords. The source code for this project is available on our public GitHub page. Technologies used include: Python (including the requests_html, Pandas, urllib, and PyPDF2 libraries).
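The crawl loop described above is essentially a breadth-first search restricted to one domain. A minimal sketch with the standard library’s `html.parser`; `fetch` is a hypothetical callable that returns a page’s HTML (in the project this role was filled by requests_html), and keyword matching is a simple case-insensitive substring test, so short keywords can match inside longer words.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, Iterable, List, Set
from urllib.parse import urldefrag, urljoin, urlparse


class _PageParser(HTMLParser):
    """Collect text fragments and href targets from one HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.text_parts: List[str] = []
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        self.text_parts.append(data)


def crawl_site(start_url: str, keywords: Iterable[str],
               fetch: Callable[[str], str]) -> Dict[str, Set[str]]:
    """Breadth-first crawl of one domain; return {url: keywords found on that page}."""
    domain = urlparse(start_url).netloc
    keywords = [k.lower() for k in keywords]
    visited, queue, hits = {start_url}, deque([start_url]), {}
    while queue:
        url = queue.popleft()
        parser = _PageParser()
        parser.feed(fetch(url))
        text = " ".join(parser.text_parts).lower()
        found = {k for k in keywords if k in text}
        if found:
            hits[url] = found
        for href in parser.links:
            link, _fragment = urldefrag(urljoin(url, href))
            if urlparse(link).netloc == domain and link not in visited:
                visited.add(link)   # the "already visited" check from the text
                queue.append(link)
    return hits
```

To search many websites in parallel, `crawl_site` could be mapped over the list of sites with `concurrent.futures.ThreadPoolExecutor`, since the work is dominated by network waits; the original script’s parallelization strategy is not described. This sketch also omits error handling and does not skip `<script>` or `<style>` contents.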

Prof. Firoozi encountered further challenges in this research. Extracting text from highly structured PDFs in proper reading order can be difficult. Highly structured documents contain text in columns of various shapes and widths, as well as varied background and font colours. Extracting text directly from the file can suffer from improper ordering of text blocks and from intentional obfuscation by the document author. Other techniques such as optical character recognition (OCR), though able to deal with intentional obfuscation, cannot cope with varying widths and shapes of text columns, or with font and background colours. To overcome these issues, the RCS team implemented a tool that uses a mixture of OCR and classical computer vision segmentation techniques to extract text from highly structured documents in proper reading order. Technologies used include: Python (including the Tesseract and OpenCV libraries).
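Once segmentation has produced text-block bounding boxes (for example from OpenCV contour detection) and OCR has read each block, the blocks still have to be put in reading order. The sketch below shows one simple heuristic, grouping horizontally overlapping blocks into columns; it is an illustrative assumption, not the RCS tool’s algorithm, and it does not handle headings that span several columns.

```python
from typing import List, NamedTuple


class Block(NamedTuple):
    x: int  # left edge in pixels
    y: int  # top edge in pixels
    w: int  # width
    h: int  # height
    text: str  # OCR output for this block


def reading_order(blocks: List[Block]) -> List[Block]:
    """Order blocks column by column (left to right), top to bottom within a column.

    Blocks whose horizontal extents overlap are treated as the same column.
    """
    columns: List[List[Block]] = []
    for b in sorted(blocks, key=lambda b: b.x):
        for col in columns:
            left = min(c.x for c in col)
            right = max(c.x + c.w for c in col)
            if b.x < right and b.x + b.w > left:  # horizontal overlap with this column
                col.append(b)
                break
        else:
            columns.append([b])  # no overlapping column: start a new one
    columns.sort(key=lambda col: min(c.x for c in col))
    return [b for col in columns for b in sorted(col, key=lambda b: b.y)]
```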

- PermafrostNet - Prof. Stephan Gruber (Department of Geography and Environmental Studies)

Permafrost underlies more than one-third of the Canadian land surface. Most of this is expected to experience significant loss of subsurface ice over the next 100 years and beyond, leading to irreversible landscape transformations, design and maintenance challenges for infrastructure, and threats to the health of northerners. The NSERC Permafrost Partnership Network for Canada (NSERC PermafrostNet), led by Prof. Stephan Gruber from the Department of Geography and Environmental Studies, will develop better spatial estimates of ground-ice content and geotechnical characteristics, improve the representation of key phenomena and processes in models, and provide simulation results at scales commensurate with observations.

The Research Software Development team has supported this research by designing and implementing an interactive browser for permafrost data. This modern and secure web application provides access to, and visualizations of, data sets from a variety of sources and offers a shared set of tools for the permafrost research community. This resource can be accessed at pdsp.permafrostnet.ca. The source code for the various aspects of this project can be found on our public GitHub page at the links below. Technologies used include: JavaScript (including React and Redux), Python (including Flask, SQLAlchemy and Marshmallow), PostgreSQL and Keycloak.

Source code:

- LabVIEW Data Visualization - Prof. Matthew Johnson (Department of Mechanical & Aerospace Engineering)

The Energy & Emissions Research Lab (EERL), led by Prof. Matthew Johnson in the Department of Mechanical & Aerospace Engineering, is developing an innovative methane sensor, known as the VentX, to quantify emissions from key oil and gas sector sources that are currently challenging to measure, and hence challenging to regulate. Funded by Natural Resources Canada’s (NRCan) Clean Growth Program, the VentX sensor uses a tunable diode laser to optically measure the transient flux of methane from critical sources such as well casing and storage tank vents. The optical measurement approach permits field measurements within hazardous locations, allowing acquisition of the quantitative data necessary to design mitigation solutions, to calculate carbon credits, and ultimately to reduce emissions. Data from the VentX are streamed wirelessly outside the hazardous zone, allowing real-time monitoring by multiple personnel at once using cellular phones or laptops.

The Research Software Development team has supported this research by designing and implementing a desktop and mobile web front end for the sensor, displaying real-time data in a user-friendly way on web-connected phones in the field. The sensor physically interfaces with LabVIEW, a system that allows developers to retrieve real-time data from the sensors. The front end is built upon LabVIEW and displays 1 Hz results, which include velocity, methane concentration, and methane emissions rate. Technologies used include: Bootstrap and JavaScript (including the jQuery and D3.js – Data-Driven Documents libraries).

- MathLab Migration - Prof. Jo-Anne Lefevre (Institute of Cognitive Science and Centre for Applied Cognitive Research)

The MathLab, under the directorship of Prof. Jo-Anne Lefevre from the Institute of Cognitive Science and Centre for Applied Cognitive Research, conducts research into the cognitive processes involved in numerical and mathematical abilities. Research projects are designed to explore different aspects of mathematical cognition, from its development across different ages and cultures to the basic cognitive processes and numeracy skills such as the retrieval and representation of mathematical knowledge. The lab receives funding from NSERC and SSHRC, and collaborates with researchers in Montreal, Winnipeg, Belfast, and Santiago, Chile. Using HUBzero, and now Microsoft Teams, has facilitated our collaborations. It helps us to keep track of the materials used in the research, provides a secure online repository for de-identified data, and allows direct collaboration as we write manuscripts. We can post drafts of articles, work on them together or sequentially, and post comments and replies. As the use of Microsoft Teams increases, the lab is finding new ways to be more efficient in its joint projects. The MathLab site includes data from several large projects, each with several hundred participants. The Lab currently has more than 30 researchers from six different universities, including faculty members, postdocs, graduate students, and undergraduate students, all part of the MathLab Team on Microsoft Teams.

The Research Software Development team has supported this research by migrating the entire online repository for all of the lab’s research projects from a standalone, unsupported content management system based on HUBzero to Microsoft Teams, a platform supported centrally at Carleton. The development work produced a tool whose source code is available on GitHub. This command-line tool bulk-imports a list of members, manually exported from the HUBzero database, into private channels in Teams. Technologies used include: Python (including the Pandas and Requests libraries).
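The bulk import can be expressed as one Microsoft Graph “add member to channel” request per exported row. The sketch below only builds the requests; the CSV column names are hypothetical, obtaining an access token and sending the requests (for example with Requests) are left out, and the text does not say whether the original tool used Microsoft Graph in this way. A user generally must already be a member of the team before being added to one of its private channels.

```python
import csv
import io
from typing import Dict, List

GRAPH = "https://graph.microsoft.com/v1.0"


def channel_member_requests(csv_text: str, team_id: str) -> List[Dict]:
    """Turn an exported member list into Graph 'add channel member' request specs.

    Expects hypothetical CSV columns: email, channel_id, role ("owner" or blank).
    """
    out = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        role = (row.get("role") or "").strip().lower()
        out.append({
            "method": "POST",
            "url": f"{GRAPH}/teams/{team_id}/channels/{row['channel_id'].strip()}/members",
            "json": {
                "@odata.type": "#microsoft.graph.aadUserConversationMember",
                "roles": ["owner"] if role == "owner" else [],
                "user@odata.bind": f"{GRAPH}/users('{row['email'].strip()}')",
            },
        })
    return out
```

Each spec could then be sent as `requests.post(spec["url"], json=spec["json"], headers={"Authorization": f"Bearer {token}"})`, where `token` is an access token with suitable permissions.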

- AI-assisted Textual Data Extraction for CEO Personality Analysis - Prof. Sana Mohsni (Sprott School of Business)

Corporations deliver information to the public in various ways, such as through Securities and Exchange Commission (SEC) filings, shareholder meetings, and Q&A sessions with C-suite members. Researchers can study these sources of information to gain insights into the functioning of these corporations.

Prof. Sana Mohsni and her PhD Student Awais Mojai from the Sprott School of Business focus their research on environmental, social, and governance (ESG) investing done by corporations. ESG investing refers to the consideration of environmental, social, and governance factors in the investment decision-making process. Investors who prioritize ESG criteria seek to support companies that are environmentally responsible, socially equitable, and governed transparently and ethically.

The researchers have found that a fruitful source of information on corporate ESG investing is the Q&A sessions held at shareholder meetings. Specifically, they are interested in the responses given by the CEO. Transcripts of these sessions are available from companies such as Bloomberg and Refinitiv. However, thousands of these transcripts are available, making it impossible to cover all the material manually. The task is further complicated by the two sources of transcripts, Bloomberg and Refinitiv, each with its own style and formatting that varied slightly from year to year. Finally, not all Q&A sessions included CEO participation. These additional complications prevent the use of a simple rules-based approach to the data extraction.

This is the perfect use case for Document AI. Document AI is a collection of machine learning techniques that automate the analysis and extraction of information from large volumes of text documents. It leverages machine learning and natural language processing to understand and process the content, enabling researchers to handle vast amounts of data efficiently.

To assist in this research, the RCS team implemented a Document AI analysis pipeline to automate the extraction of CEO answers from the vast collection of Q&A session transcripts. The pipeline was designed with two crucial steps to ensure precision and efficiency. The first step identified the CEO’s name from the list of Q&A panelists, if one was present, across diverse and varied document formats. The second step scanned the remainder of each document to select only the responses provided by the CEO, including those split over multiple pages, filtering out irrelevant information (such as page headers and footers) and responses from other executives.

By implementing this Document AI pipeline, the RCS team transformed what was once an overwhelming and time-consuming task into a streamlined and manageable process. This automation not only saved countless hours of manual labour but also ensured a higher degree of accuracy and consistency in the extracted data. As a result, this research on corporate ESG investing became significantly more efficient and effective, allowing the researchers to focus on analyzing the insights rather than getting bogged down by the tedious task of data extraction.
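The real pipeline relied on Document AI models precisely because simple rules could not cope with the varied transcript formats. Purely to illustrate the inputs and outputs of the two steps, here is a deliberately simplified rules-based stand-in; the `Name - Title` panelist format, the (speaker, text) turn representation, and the header/footer patterns are all hypothetical.

```python
import re
from typing import List, Optional, Sequence, Tuple


def find_ceo(panelists: Sequence[str]) -> Optional[str]:
    """Step 1 (simplified): return the panelist whose title names the CEO, if any."""
    for entry in panelists:
        name, _, title = entry.partition(" - ")
        if re.search(r"\b(CEO|Chief Executive Officer)\b", title, re.IGNORECASE):
            return name.strip()
    return None  # not every session includes the CEO


def ceo_answers(turns: Sequence[Tuple[Optional[str], str]], ceo: str,
                noise_patterns: Sequence[str]) -> List[str]:
    """Step 2 (simplified): keep only the CEO's answers, joining page-split turns.

    A turn whose speaker is None continues the previous speaker (e.g. after a page
    break); lines matching a noise pattern (page headers/footers) are dropped.
    """
    answers: List[str] = []
    current: Optional[str] = None
    for speaker, text in turns:
        if speaker is not None:
            current = speaker
            if current == ceo:
                answers.append("")  # start a new answer
        if current != ceo:
            continue  # question or another executive's response
        kept = [ln.strip() for ln in text.splitlines()
                if ln.strip() and not any(re.search(p, ln) for p in noise_patterns)]
        answers[-1] = " ".join(filter(None, [answers[-1], *kept]))
    return [a for a in answers if a]
```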

- SigLib - Prof. Derek Mueller (Department of Geography and Environmental Studies)

SigLib stands for Signature Library and is a suite of Python scripts to query and manipulate Synthetic Aperture Radar (SAR) satellite imagery. SigLib works on Windows, Mac and Linux and relies on standard geospatial open-source libraries such as PostGIS and GDAL/OGR to query and manipulate imagery and metadata. At present, SigLib supports RADARSAT-1 (2 major file formats), RADARSAT-2, and Sentinel-1 imagery. Support for RADARSAT Constellation Mission (RCM) will be added in the future.

SigLib and the underlying SigLib API are useful for anyone who wants to:

- find and download lots of SAR imagery

- generate a ‘stack’ of images of a specific region over time, in a consistent way

- generate a library of SAR signature (backscatter) statistics over both space and time

- automate the process of querying, downloading, and manipulating SAR data

- keep track of all the SAR data and associated metadata they have on their computer

SigLib is an initiative of the Water and Ice Research Laboratory (WIRL) in the Department of Geography and Environmental Studies. It will be released on GitHub soon as an open-source library.

The Research Software Development team supported this research by implementing the functionality of the Query mode of SigLib. This mode is used to query and download SAR imagery from EODMS (RADARSAT-1, RADARSAT-2, and RCM, for those with access) and Copernicus Hub (Sentinel-1). It can also extract metadata from local files to create a local database table of imagery to query against. Technologies used include: Python (including the psycopg2, GDAL, configparser, pandas, geopandas, sentinelsat, and eodms-api-client libraries).
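A local imagery table makes it possible to query files already on disk by place and time. SigLib itself uses PostgreSQL/PostGIS (via psycopg2) for this; the sketch below swaps in SQLite from the standard library and a plain bounding-box overlap test, and its schema and function names are hypothetical. Extracting each file’s footprint and acquisition time (for example with GDAL) is not shown.

```python
import sqlite3
from typing import List, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS imagery (
    file_path TEXT PRIMARY KEY,
    satellite TEXT,  -- e.g. 'RADARSAT-2', 'Sentinel-1'
    acquired  TEXT,  -- ISO 8601 acquisition time, compared as text
    min_lon REAL, min_lat REAL, max_lon REAL, max_lat REAL  -- footprint bounding box
)"""


def add_image(conn: sqlite3.Connection, file_path: str, satellite: str,
              acquired: str, bbox: Tuple[float, float, float, float]) -> None:
    """Insert or update one image's metadata; bbox is (min_lon, min_lat, max_lon, max_lat)."""
    conn.execute("INSERT OR REPLACE INTO imagery VALUES (?, ?, ?, ?, ?, ?, ?)",
                 (file_path, satellite, acquired, *bbox))


def query_images(conn: sqlite3.Connection, bbox: Tuple[float, float, float, float],
                 start: str, end: str) -> List[Tuple[str, str]]:
    """Return (file_path, acquired) for images overlapping bbox within [start, end]."""
    min_lon, min_lat, max_lon, max_lat = bbox
    rows = conn.execute(
        """SELECT file_path, acquired FROM imagery
           WHERE max_lon >= ? AND min_lon <= ? AND max_lat >= ? AND min_lat <= ?
             AND acquired BETWEEN ? AND ?
           ORDER BY acquired""",
        (min_lon, max_lon, min_lat, max_lat, start, end))
    return rows.fetchall()
```

Comparing acquisition times as text only works if every timestamp uses the same ISO 8601 format.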

- Rome from the Ground Up - Prof. Jaclyn Neel (Greek and Roman Studies)

“Rome from the Ground Up” is an SSHRC-supported YouTube social network analysis project led by Prof. Jaclyn Neel from Greek and Roman Studies. The aim of this project is to discover popular opinion about ancient Rome by analyzing amateur YouTube comments, video contents, and communities. These popular media are also compared to source material in ancient texts, modern textbooks, and mainstream fiction.

The Research Software Development team supported this research by implementing a suite of Python scripts that collect metadata about a set of videos and their creators using the publicly available YouTube Data API v3.0. The scripts also retrieve the videos’ comments and metadata about the commenters. By merging these different types of information, the program builds a network composed of creators, videos, and commenters, which can be visualized in the data visualization tool Cytoscape. The set of input videos can be given as a playlist URL, or retrieved with a YouTube search query using the implemented script. Technologies used include: Python (including the pandas library) and the YouTube Data API v3.0.
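Cytoscape can import a network from a Simple Interaction Format (SIF) file, in which each line names a source node, an interaction type, and a target node. A sketch of building the creator/video/commenter network and writing it as tab-separated SIF; the simplified input records and the interaction names are assumptions, and fetching the data from the YouTube Data API is not shown.

```python
from typing import Dict, Iterable, Set, Tuple

Edge = Tuple[str, str, str]  # (source, interaction, target)


def build_edges(videos: Iterable[Dict[str, str]],
                comments: Iterable[Dict[str, str]]) -> Set[Edge]:
    """Link creators to their videos and commenters to the videos they commented on.

    Hypothetical record shapes: videos have "video_id" and "channel_id";
    comments have "video_id" and "author_channel_id".
    """
    edges: Set[Edge] = set()
    for v in videos:
        edges.add((v["channel_id"], "uploaded", v["video_id"]))
    for c in comments:
        edges.add((c["author_channel_id"], "commented_on", c["video_id"]))
    return edges  # a set, so repeat comments by one user yield a single edge


def to_sif(edges: Iterable[Edge]) -> str:
    """Serialize edges as tab-separated SIF lines, sorted for reproducible output."""
    return "".join("\t".join(e) + "\n" for e in sorted(edges))
```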

Source code:

Prof. Neel presenting preliminary results using the tools implemented here:

- Language2Test - Prof. Geoff Pinchbeck (School of Linguistics and Language Studies)

Language2Test is a language testing and teaching/learning platform, envisioned by Prof. Geoff Pinchbeck from the School of Linguistics and Language Studies, that will allow multi-site language acquisition research to be conducted and low-stakes diagnostic language testing to be administered. University student demographics in North America have changed dramatically and now include larger numbers of bilingual and multilingual students from diverse backgrounds, including international students, recent immigrants, and long-term residents. Commercially available language tests used for university admissions are expensive and do not provide diagnostic information. Language2Test allows language acquisition research to be scaled up, which affords a more nuanced examination of individual factors such as first-language effects, previous education, and home literacy practices. The suite of test instruments will monitor reading and writing proficiency as well as vocabulary and grammar knowledge of bilingual and multilingual individuals in English and in several other languages over time. Ultimately, this research will inform advances in academic language pedagogy and provide insights into the linguistic aspects of academic subject education in general. The beneficiaries of this research will include post-secondary programs for international students (‘English for Academic Purposes’ programs), language programs for newcomers to Canada (e.g., ‘LINC’ and ‘ESL’), and French-immersion and bilingual programs in K-12 schools in Canada and elsewhere. This resource can be accessed online at language2test.carleton.ca.

The Research Software Development team has supported this research by designing and implementing the Language2Test platform as a modern web application. This project is still under development. Technologies used include: JavaScript (including React and Redux), Python (including Flask, SQLAlchemy and Marshmallow), PostgreSQL and Keycloak.

Source code:

- Corpus Text Processor - Prof. Geoff Pinchbeck (School of Linguistics and Language Studies)

Prof. Geoff Pinchbeck from the School of Linguistics and Language Studies has compiled a corpus of closed-caption and subtitle text files for more than 50,000 TV programs and movies. This corpus approximates informal, conversational English speech and will be made available as a database for two types of linguistic searches. First, Prof. Pinchbeck’s lab plans to conduct corpus linguistics research for which this type of corpus will be a useful source of data. Second, teachers and learners of English will be able to find examples of how English is used in TV and movie scripts; this method of language pedagogy is termed “Data-Driven Learning” in the research literature, and some websites (e.g., the Corpus of Contemporary American English, COCA) charge to provide such a service. Although the source software for the ‘Corpus Text Processor’ was already available as a convenient, free, downloadable application for macOS or Windows from the Corpus & Repository of Writing (‘CROW’), making this technology available as a command-line tool allows very large corpora (such as the TV and movie corpus described above) to be processed on a university VM server. Without a command-line version, processing a corpus of this size on a personal computer might take several weeks or even months of intensive processing time. Prof. Pinchbeck is grateful to everyone at Research Computing Services at Carleton University for making this possible.

The Research Software Development team has supported this research by modifying the existing code to run from the Linux command line, using the Python multiprocessing library to spread the work across multiple processes.
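The general pattern of fanning a large corpus out over several CPU cores with `multiprocessing` can be sketched as follows. This is not the Corpus Text Processor’s code: `count_tokens` is a hypothetical stand-in for whatever per-file processing the real tool performs, and the chunk size is an arbitrary assumption.

```python
import multiprocessing as mp
from collections import Counter
from pathlib import Path


def count_tokens(path):
    """Hypothetical per-file step: lower-cased whitespace token counts for one text file."""
    text = Path(path).read_text(encoding="utf-8", errors="replace")
    return Counter(text.lower().split())


def process_corpus(paths, workers=None):
    """Process files in a pool of worker processes and merge the per-file results.

    workers=None lets multiprocessing use os.cpu_count() processes.
    """
    total = Counter()
    with mp.Pool(processes=workers) as pool:
        # imap_unordered hands results back as workers finish, so only one
        # file's result needs to be merged at a time.
        for counts in pool.imap_unordered(count_tokens, paths, chunksize=64):
            total.update(counts)
    return total
```

Because worker processes may re-import the calling module, `process_corpus` should be called from a script guarded by `if __name__ == "__main__":`, for example `process_corpus(sorted(Path("corpus").glob("*.txt")))`, where `corpus` is a placeholder directory name.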

- Charity Insights Canada Project Website Prototype - Prof. Paloma Raggo (School of Public Policy and Administration)

The Canadian charitable sector employs more than 10% of the country’s full-time workforce and is estimated to contribute more than $169 billion to Canada’s annual GDP (The Giving Report 2021). However, up-to-date data on the sector are critically scarce and urgently needed. The COVID-19 pandemic painfully highlighted this gap in knowledge: urgent policy action was needed, but recent data were unavailable to strengthen the sector’s responses to the crisis and lessen the impact on its own workforce. The Charity Insights Canada Project (CICP), led by Paloma Raggo, a faculty member in the Master of Philanthropy and Nonprofit Leadership (MPNL), School of Public Policy and Administration, will ensure that policymakers, practitioners, researchers, and the general public have accurate, timely, and comprehensive information about the charitable sector in Canada. Through weekly surveys and reports, an online interactive information and training hub, and monthly policy briefs, the CICP will offer an exhaustive overview of the trends, challenges, and opportunities facing the Canadian charitable sector.

The Research Computing Services team supported this research proposal by building a prototype website, which can be found here. The website was built using JavaScript (including the React library) and is hosted on GitHub Pages.

- Atlascine Multi Maps Module - Profs. Fraser Taylor and Peter Pulsifer (Department of Geography and Environmental Studies)

The Geomatics and Cartographic Research Centre (GCRC) is led by Professor D. R. F. Taylor, Chancellor’s Distinguished Research Professor of International Affairs, Geography and Environmental Studies, and Professor Peter Pulsifer from the Department of Geography and Environmental Studies. The centre focuses on applying geographic information processing and management to the analysis of socioeconomic issues of interest to society at a variety of scales, from the local to the international, and on presenting the results in new, innovative cartographic forms. Cybercartography is a new multimedia, multi-sensory and interactive online form of cartography, and its main products are Cybercartographic Atlases, which use location as a key organizing principle. These atlases create narratives from a variety of different perspectives and include both quantitative and qualitative information. They include stories, art, literature and music, as well as linguistic, socioeconomic and environmental information. The Nunaliit Cybercartographic Atlas Framework was born out of a multidisciplinary research project in 2003 and has evolved continuously since then. It is an innovative open-source technology that facilitates participatory atlas creation and offers the means to tell stories and present research using maps as a central way to connect and interact with the data, highlighting relationships between many different forms of information from a variety of sources.

Atlascine is a unique online cartographic application based on Nunaliit. While there are a range of excellent web applications designed to tell stories with maps, Atlascine is the only one fully designed to study how we express our relationships with places through stories and to mobilize interactive maps to listen to these stories. To do so, Atlascine connects media files (i.e. video and audio) with transcripts (i.e. text) and maps via a range of tags. Atlascine is a collaborative project between The Geomedia Lab at Concordia University led by Professor Sébastien Caquard and GCRC. It has been used to develop different online atlases such as The Atlas of Rwandan Life Stories.

The Research Software Development team supported the Atlascine project by building a new feature called the Multi Maps Module. This module organizes large volumes of “story data” by the themes that have been assigned to them. Traditionally, users would first select a story and then explore the themes it contained; the new module instead allows a user to select a theme and then explore the stories that touch on it. This enhances Atlascine’s existing goals by helping users identify new patterns and structures in these stories and associate them with the places where different events took place. The module comprises the following components: a legend, an interactive digital map, a media player, a media transcript, and all existing CRUD functionality provided by the Nunaliit Atlas Framework. Each story associated with the selected theme appears in a legend located at the bottom left of the screen. When a story is toggled via the legend, the locations of all its events are represented on the map as donut rings, which indicate where events occurred for the given theme and story. Clicking a ring on the map opens and plays the corresponding audio or video element (with its accompanying transcript), starting at the very moment the speaker is talking about the selected theme at that specific geographic location. Technologies used for this new module include JavaScript and the existing Nunaliit framework. The video below gives a brief demonstration of the new feature, and a link to the code repository containing it can be found here.
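The core idea of the Multi Maps Module, inverting story-first data so that a theme leads to its stories and places, can be sketched with a nested index. The module itself is written in JavaScript on Nunaliit; this Python version only illustrates the data reorganization, and the segment fields (`story`, `theme`, `place`, `start`) and sample values are hypothetical.

```python
from collections import defaultdict

# Hypothetical tagged transcript segments: each ties a moment in a story's media
# (start time in seconds) to a theme and a place. Not Nunaliit's actual schema.
segments = [
    {"story": "s1", "theme": "family", "place": "place_a", "start": 12.0},
    {"story": "s1", "theme": "school", "place": "place_b", "start": 95.5},
    {"story": "s2", "theme": "family", "place": "place_c", "start": 40.0},
    {"story": "s2", "theme": "family", "place": "place_a", "start": 210.0},
]


def index_by_theme(segments):
    """Invert story-first data into theme -> story -> [(place, start_seconds)]."""
    index = defaultdict(lambda: defaultdict(list))
    for seg in segments:
        index[seg["theme"]][seg["story"]].append((seg["place"], seg["start"]))
    for stories in index.values():
        for places in stories.values():
            places.sort(key=lambda p: p[1])  # chronological order within each story's media
    return index


family = index_by_theme(segments)["family"]
print(sorted(family))   # ['s1', 's2']  (stories that would appear in the legend)
print(family["s2"])     # [('place_c', 40.0), ('place_a', 210.0)]
```

In this sketch, each `(place, start)` pair corresponds to one ring on the map, and the start time is the offset at which the media player would begin playback when that ring is clicked.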

- WebDEVS Environment - Prof. Gabriel Wainer (Department of Systems and Computer Engineering)

The Discrete Event System Specification (DEVS) is a hierarchical and modular simulation formalism that can be used to simulate a very wide range of real-world systems. Its flexibility comes at a cost: greater complexity when developing simulation models, less model reusability, and lower adoption rates. In addition, the DEVS software environment is generally fragmented and siloed. Tools have been developed to support model debugging or simulation trace visualization, but they are often closely coupled to specific simulators, which limits their usefulness. This research, led by Prof. Gabriel Wainer of the Department of Systems and Computer Engineering, seeks to design and implement a web-based modelling and simulation environment that eliminates pressure points faced by DEVS users across the simulation lifecycle.
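For readers unfamiliar with DEVS, the classic textbook definitions of an atomic model M and a coupled model N are reproduced below as background; they describe the formalism in general, not WebDEVS internals. X and Y are the input and output event sets and S is the state set. When the time advance ta(s) expires, the output function λ emits an event and the internal transition δ_int fires; when an input arrives after elapsed time e, the external transition δ_ext computes the new state. A coupled model connects component models D through external input (EIC), external output (EOC) and internal (IC) couplings, with Select breaking ties between simultaneous events. Because a coupled model can itself be used as a component, models compose hierarchically.

```latex
\begin{aligned}
M &= \langle X, Y, S, \delta_{\mathrm{int}}, \delta_{\mathrm{ext}}, \lambda, ta \rangle \\
\delta_{\mathrm{int}} &: S \to S \\
\delta_{\mathrm{ext}} &: Q \times X \to S, \qquad Q = \{(s, e) \mid s \in S,\ 0 \le e \le ta(s)\} \\
\lambda &: S \to Y \\
ta &: S \to \mathbb{R}_{0}^{+} \cup \{\infty\} \\[4pt]
N &= \langle X, Y, D, \{M_d \mid d \in D\}, \mathit{EIC}, \mathit{EOC}, \mathit{IC}, \mathit{Select} \rangle
\end{aligned}
```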

The Research Software Development team supported this research by developing a user interface component for the front end of the WebDEVS Environment. This component will allow non-expert users to easily prepare visualizations for standard DEVS models, and it integrates into the existing platform to offer a seamless user experience. The component was developed exclusively with JavaScript and HTML to keep it as lightweight and reusable as possible.

Source code:

- Twitter Content Analysis - Prof. Stephen White (Department of Political Science)

Has social media empowered civil society, or is it contributing to the hollowing out of democracy? Around the world, democracy is increasingly viewed with mistrust and disillusionment. The result has been problematic, to say the least: the traditional metrics of political participation (most notably voting, but also formal political party membership, campaign donations, involvement in community groups and general political interest) have declined severely throughout Europe and the Americas since the 1970s. In Canada, federal electoral turnout plummeted to its lowest level on record in 2008, reinforced by parallel trends of increasing discontent with the major political parties, a stark rise in regional and ethnic populism within party support bases, and widespread cynicism towards politics in recent years.

Yet during this same period, informal political engagement online has skyrocketed. From the creation and sharing of unique content to the mobilization and articulation of ideas, the emergence of novel forms of engagement has in many ways been powered by the now ubiquitous place of smartphones and social media in contemporary life. But even as these novel forms of engagement herald new possibilities for democratic participation, issues such as privacy breaches, data mining, doxing, misinformation and bots raise new concerns as well. This dissertation project by Asif Hameed, supervised by Prof. Stephen White from the Department of Political Science, is a discourse analysis that seeks to examine this shift.

Using data provided by the Government of Canada’s Digital Ecosystem Research Challenge, and with the assistance of the Research Software Development team, the foundation of this project is a Twitter content analysis of tweets related to the 2019 Canadian federal election, which illustrates not only the depth of e-participation on social media but also the character of that participation. By analyzing the public responses to tweets made by three different classes of political information gatekeepers, this project seeks to address a simple question: is this new wave of participation occurring along deliberative, democratic lines, or does it reflect the politics of what scholars are calling the post-trust era?

The Research Software Development team has supported this research by facilitating the download of millions of tweets related to these issues. The source code for this project can be found on our public GitHub page below. Technologies used include: Python (including the tqdm library).
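The page does not describe how the download was structured, but a common pattern for fetching millions of records is to request them in fixed-size batches while tqdm reports progress. The sketch below shows that pattern under stated assumptions: `fetch_batch` is a hypothetical wrapper around whatever API was actually used, the batch size of 100 is an assumed per-request limit, and a no-op fallback replaces tqdm if it is not installed.

```python
from typing import Callable, Iterable, Iterator, List

try:
    from tqdm import tqdm  # progress bar library named in the project's technology list
except ImportError:  # no-op fallback so the sketch still runs without tqdm
    def tqdm(iterable, **kwargs):
        return iterable


def chunked(ids: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive batches of at most `size` tweet IDs."""
    for i in range(0, len(ids), size):
        yield ids[i:i + size]


def download_all(ids: List[str], fetch_batch: Callable[[List[str]], Iterable[dict]],
                 batch_size: int = 100) -> List[dict]:
    """Fetch tweets batch by batch; `fetch_batch` is a hypothetical API wrapper."""
    tweets: List[dict] = []
    n_batches = (len(ids) + batch_size - 1) // batch_size  # ceiling division for the progress total
    for batch in tqdm(chunked(ids, batch_size), total=n_batches, unit="batch"):
        tweets.extend(fetch_batch(batch))
    return tweets


def fake_fetch(batch: List[str]) -> List[dict]:
    """Stand-in for a real API call, for illustration only."""
    return [{"id": tid} for tid in batch]


result = download_all([str(i) for i in range(250)], fake_fetch)
print(len(result))
```

Passing `total` matters because `chunked` is a generator with no length, so without it tqdm could show a running count but not a percentage or time estimate.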

- CAREN: Computer-Automated sRNA Engineer - Prof. Alex Wong (Department of Biology)

In bacteria, engineered small RNAs (sRNAs) are powerful tools for studying gene function. Using engineered sRNAs, researchers can tunably knock down the expression of a specific target gene to determine the effects of that gene’s loss. This is particularly useful for studying genes that are essential for survival, which cannot be knocked out using traditional techniques. The purpose of this project, led by Prof. Alex Wong from the Department of Biology, is to create software that designs sRNAs targeting user-specified genes, using genome sequence information as input. This resource is available online at caren.carleton.ca.
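The page does not specify which design algorithms CAREN implements. As an illustration of the basic sequence operation involved, a widely used design pattern gives the sRNA a short antisense region that base-pairs with the target mRNA around its start codon. The minimal sketch below assumes that pattern; the window position and the 24-nucleotide length are assumptions, not CAREN’s parameters. Biopython, which the project uses, provides the same operation as `Seq(...).reverse_complement()`; plain Python keeps the sketch dependency-free.

```python
COMPLEMENT = str.maketrans("ACGTacgt", "TGCAtgca")


def reverse_complement(seq: str) -> str:
    """Return the reverse complement of a DNA sequence."""
    return seq.translate(COMPLEMENT)[::-1]


def antisense_region(gene: str, start: int, upstream: int = 0, length: int = 24) -> str:
    """Antisense (base-pairing) sequence for a window of the target's coding strand.

    `start` is the 0-based index of the start codon in `gene`; the window begins
    `upstream` nt before it and spans `length` nt. Both defaults are assumptions.
    """
    begin = max(0, start - upstream)
    window = gene[begin:begin + length]
    # The sRNA pairs with the mRNA, whose sequence matches the coding strand,
    # so its base-pairing region is the reverse complement of the window
    # (written in DNA letters; T stands for U).
    return reverse_complement(window)


gene = "GGAGGTTTATGGCTAGCAAAGGAGAAGAACTTTTC"  # hypothetical sequence, not real input data
start = gene.find("ATG")
print(antisense_region(gene, start, upstream=4, length=24))
```

A real design tool would also need to check candidate sequences for unintended matches elsewhere in the genome, which is the kind of search BLAST, listed among the project’s technologies, is commonly used for.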

The Research Software Development team will support this research by implementing the sRNA design algorithms selected by Prof. Wong in a tool that researchers can use on the command line as well as through a web interface. Technologies used for the desktop tool include: Python (including the Biopython and Celery libraries) and BLAST. Technologies used for the web application include: JavaScript (including React and Redux), Python (including Flask, Biopython and Celery), BLAST, and Redis.

Source code:


Short URL:

https://carleton.ca/rcs/?p=616