The term ‘unified memory’ has become increasingly popular of late, and for good reason. More and more of a computer’s main components have been consolidated onto a single microchip, hence the term SoC – ‘System on a Chip’. For years the iGPU – ‘integrated Graphics Processor’ – has existed so that you can display your computer’s output without the need for a dedicated graphics card. Now a new SoC design combining CPU, GPU and main memory on a single microchip is gaining popularity, and it is described as unifying the memory, or ‘unified memory’.

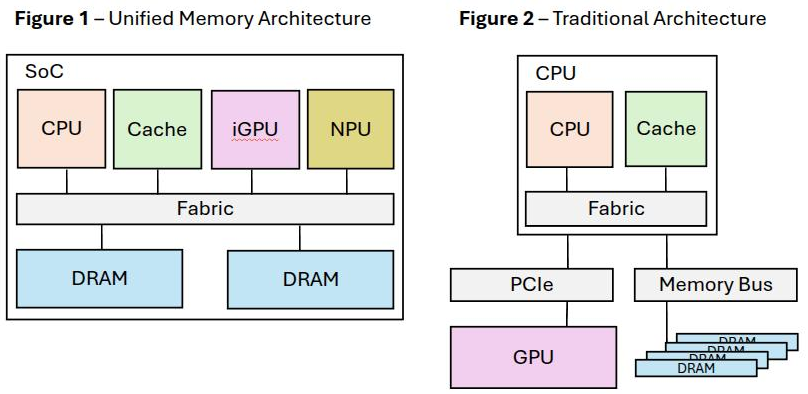

Computers with a unified memory architecture feature a single shared pool of memory used by both the CPU and GPU, unlike traditional systems that have separate RAM and VRAM (see figures 1 and 2). This unified design, popularised by Apple’s M-series chips, enables higher efficiency, reduced latency, and faster data transfer: because the CPU and GPU work in the same memory space, data does not need to be copied between them over a bus, with the overhead that entails.

Consider the CPU generations categorised as follows:

- 1st generation – 4-bit CPU

- 2nd generation – 8-bit CPU

- 3rd generation – 16-bit CPU

- 4th generation – 32-bit CPU

- 5th generation – 64-bit CPU

- 6th generation – multi-core CPU

- 7th generation – unified memory CPU

Unified memory is the next generation of CPU design, and it will change the tech landscape. There is now an accessible alternative to high-VRAM GPUs. This is what it means: you can use your computer’s main memory as graphics memory. Is this a big deal? Yes, it is. Take the NVIDIA RTX PRO 6000 Blackwell GPU: it has 96 GB of VRAM and, at the time of this article, costs $12k CAD. A 128 GB (2 x 64 GB) kit of 288-pin DDR5-6400 (PC5-51200) desktop memory costs $1,200 CAD. Do the math: this GPU is 10x more expensive.
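To make the comparison concrete, here is a small sketch of the same arithmetic on a per-gigabyte basis. The prices and capacities are the ones quoted above; the function name `cost_per_gb` is just an illustrative helper, and real prices will drift over time.

```python
# Prices in CAD and capacities in GB, taken from the example above.
gpu_price, gpu_gb = 12_000, 96    # NVIDIA RTX PRO 6000 Blackwell (VRAM)
ram_price, ram_gb = 1_200, 128    # 2 x 64 GB DDR5-6400 desktop kit


def cost_per_gb(price_cad: float, capacity_gb: float) -> float:
    """Return the price of one gigabyte of memory in CAD."""
    return price_cad / capacity_gb


gpu_per_gb = cost_per_gb(gpu_price, gpu_gb)   # 125.0 CAD per GB
ram_per_gb = cost_per_gb(ram_price, ram_gb)   # 9.375 CAD per GB

price_ratio = gpu_price / ram_price           # sticker price: 10x
per_gb_ratio = gpu_per_gb / ram_per_gb        # per GB: roughly 13x

print(f"GPU VRAM: ${gpu_per_gb:.2f} per GB")
print(f"DDR5 RAM: ${ram_per_gb:.2f} per GB")
print(f"Sticker price ratio: {price_ratio:.0f}x, per-GB ratio: {per_gb_ratio:.1f}x")
```

Because the RAM kit also holds more memory than the GPU, the gap per gigabyte is somewhat larger than the 10x difference in sticker price. Keep in mind that the GPU price also buys the processor itself, not just the memory.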

Unified memory uses DDR4 or DDR5 RAM (often the low-power LPDDR variants) shared between the CPU and GPU. Its bandwidth can reach around 200-800 GB/s. In contrast, dedicated GPU memory is optimized for very high bandwidth and low latency, with wide interfaces of 128-512 bits or more. Typical GPU VRAM bandwidth exceeds 500 GB/s, sometimes over 1 TB/s, and the memory is directly connected to the GPU chip with minimal latency to feed thousands of CUDA cores efficiently. This difference alone can amount to a speed difference of a factor of 3-5. At this time, dedicated GPUs are faster than unified memory GPUs.
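The bandwidth figures above follow from a simple relationship: peak theoretical bandwidth is the bus width in bytes multiplied by the transfer rate. The sketch below applies that formula to three configurations. These are illustrative assumptions chosen to show the range, not the specifications of any particular product, and real-world throughput is lower than the theoretical peak.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_mt_s: float) -> float:
    """Peak theoretical memory bandwidth in GB/s.

    bus_width_bits: total width of the memory interface in bits
    data_rate_mt_s: transfers per second per pin, in mega-transfers (MT/s)
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mt_s / 1000  # MB/s -> GB/s


# Illustrative configurations (assumptions, not product specs).
configs = {
    "Dual-channel desktop DDR5-6400 (128-bit)": (128, 6400),
    "Wide unified memory, LPDDR5X-8000 (256-bit)": (256, 8000),
    "Dedicated GPU, 21000 MT/s VRAM (384-bit)": (384, 21000),
}

for name, (width, rate) in configs.items():
    print(f"{name}: {peak_bandwidth_gb_s(width, rate):.1f} GB/s")
```

The formula shows why dedicated GPUs stay ahead: they combine a wide bus with a much higher per-pin data rate, while a unified memory design mostly gains bandwidth by widening the bus.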

Another new development in CPU design is the use of NPUs – Neural Processing Units that accelerate neural network operations – as part of the SoC design. Chips with NPUs are marketed as AI CPUs, and you will be seeing more and more of them. They will help speed up tasks performed by digital assistants like Siri or Alexa, facial recognition for secure login, and much more.

Unified memory allows AI accelerators and processors to utilise the full RAM dynamically, which is beneficial for tasks like machine learning and LLM (Large Language Model) inference. The implication is that you can use your computer’s main memory as VRAM, and this is the biggest benefit of a unified memory PC. At a time when large-VRAM GPUs are inaccessible, unified memory PCs give you an accessible option for running large-parameter LLMs.
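A rough way to judge whether a model will fit is to estimate the size of its weights: the parameter count multiplied by the bytes used per parameter. The sketch below does this for a hypothetical 70-billion-parameter model at a few common precisions and compares the result with the 96 GB and 128 GB capacities mentioned earlier. It counts weights only; the context cache, the operating system and other programs also need memory, so treat the results as a lower bound.

```python
def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate size of a model's weights in GB (weights only)."""
    return params_billion * bits_per_param / 8


def fits(params_billion: float, bits_per_param: int, memory_gb: float) -> bool:
    """True if the weights alone fit in the given amount of memory."""
    return weights_gb(params_billion, bits_per_param) <= memory_gb


model_params_billion = 70  # hypothetical model size, for illustration only

for bits in (16, 8, 4):
    size = weights_gb(model_params_billion, bits)
    print(
        f"{bits}-bit weights: {size:.0f} GB | "
        f"fits in 96 GB: {fits(model_params_billion, bits, 96)} | "
        f"fits in 128 GB: {fits(model_params_billion, bits, 128)}"
    )
```

Under these assumptions the 16-bit version needs about 140 GB and fits in neither pool, while the 8-bit and 4-bit versions fit in both. On a unified memory machine, part of the pool is also used by the system itself, so the practical limit is lower than the installed capacity.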

Here are some notable unified memory computers:

- Apple M-chip computers lead this tech. A Mac mini can cost you less than $1k CAD.

- NVIDIA DGX Spark is the well-known ARM + GPU “AI Supercomputer on your desk”, and it will set you back more than $6,000 CAD.

- AMD’s best iGPU at this time is the Radeon 890M, which benchmarks similarly to an NVIDIA GTX 1070 GPU. Look for unified memory PCs with AMD Ryzen AI 9 tech inside.

- Minisforum has built its MS-R1 line of mini-PCs around an ARM CPU. You heard that right: ARM for PC – not Intel or AMD, but ARM. It is advertised as “the first ARM mini-PC with a BIOS”.

Terms used

- Unified memory – CPU and GPU share a single unified pool of memory

- SoC – System on a Chip. Consolidates the key components of a computer or electronic system onto a single microchip.

- iGPU – integrated Graphics Processor. Graphics on the same die as the CPU.

- VRAM – Video Random Access Memory is a type of dedicated memory used specifically by a graphics processing unit (GPU).

- NPU – Neural Processing Unit. Specialized cores focused on accelerating neural network operations, commonly integrated into SoCs.

Author: Andrew Miles, Sr System Administrator, School of Computer Science, Carleton University