Introduction

OpenStack

Server Room Hardware

Compute Servers

GPU Compute Servers

Introduction

The SCS server room is an air-conditioned room devoted to the continuous operation of computer servers. The servers support the operational needs of the school’s faculty, staff and students. Those operational needs are typically categorised as: departmental services, teaching and research.

The main hardware components of a server room are:

- Computers

- Networking

- KVM System

- Power Management

- Racks

- Air conditioning

An efficient and scalable way to manage these resources is to use a cloud architecture. The School of Computer Science uses the OpenStack cloud software to manage many of its resources.

As of April 2024, the SCS OpenStack cloud is hosting:

- 54 servers

- 2,700 server vCPU cores

- 21 TB of server memory

- 325 TB of server disk space

- 123 GPUs

OpenStack

OpenStack is a free, open-standard cloud computing platform, deployed mostly as infrastructure-as-a-service (IaaS). To get the main benefits of OpenStack, you need dedicated:

- management servers

- compute servers

- storage servers

- networking

OpenStack lets you manage all of your computing resources and networking from a web-based console. Virtual servers (instances) are launched on the compute servers. Networking is handled by the Virtual Networking Infrastructure (VNI), which can also be managed from the web console. OpenStack follows the layered Open Systems Interconnection (OSI) model and builds virtual networks (layer 2) and routers (layer 3) entirely in software. There is no need to modify hardware or reconfigure your switches for each new network: it is all controlled via the OpenStack web console!
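Because a virtual network is just data that OpenStack manages in software, creating one amounts to recording a subnet and handing out addresses from it, with no physical switch involved. The toy Python model below illustrates that idea. `VirtualSubnet` is a hypothetical class, not part of OpenStack's API, and reserving the first usable address for the virtual router's gateway is an assumed convention for this sketch.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class VirtualSubnet:
    """Toy software-defined subnet (hypothetical; not the OpenStack API).

    The first usable address is reserved for the virtual router (gateway),
    and the remaining host addresses are handed out to instances in order.
    """
    cidr: str
    allocated: dict = field(default_factory=dict)  # instance name -> IP string

    def __post_init__(self) -> None:
        self.network = ipaddress.ip_network(self.cidr)
        self._free = iter(self.network.hosts())  # usable host addresses
        self.gateway = str(next(self._free))     # e.g. 10.0.0.1 for a /24

    def attach(self, instance: str) -> str:
        """Give an instance the next free address; no physical switch is involved."""
        if instance in self.allocated:           # re-attaching returns the same IP
            return self.allocated[instance]
        try:
            ip = str(next(self._free))
        except StopIteration:
            raise RuntimeError(f"subnet {self.cidr} has no free addresses") from None
        self.allocated[instance] = ip
        return ip


tenant_net = VirtualSubnet("10.0.0.0/24")
print(tenant_net.gateway)          # 10.0.0.1
print(tenant_net.attach("vm-a"))   # 10.0.0.2
print(tenant_net.attach("vm-b"))   # 10.0.0.3
```

Adding another tenant network is just another `VirtualSubnet("...")` call; nothing about the physical cabling changes.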

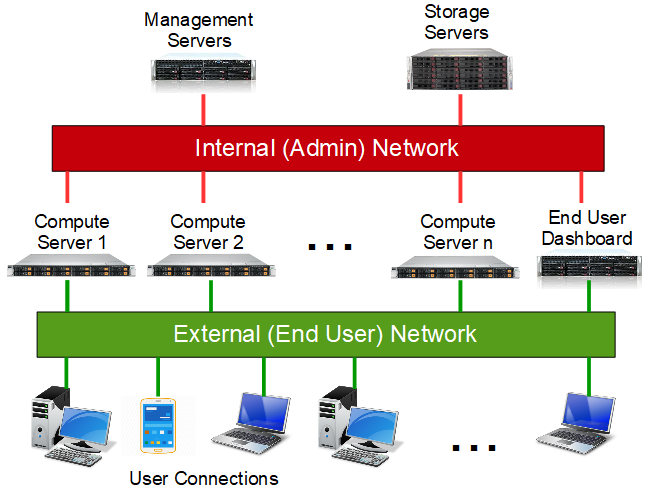

OpenStack hardware has the following structure:

The compute servers have two network connections: one for the internal (admin) network and one for the external (end-user) network. The internal network is dedicated to the functions that OpenStack requires for its operations. The external network provides connections between the OpenStack resources and the end-user. The School of Computer Science runs a 10 Gbit/s network for OpenStack.

Having two separate networks on each compute server means that moving large amounts of data on one network does not impact the operation of the other network. It also increases the security of the system by ensuring external users cannot access the underlying hardware (compute servers).


Server Room Hardware

The server room hardware is organized in computer racks, so the servers can be housed densely, minimizing physical space, and keeping them close together simplifies connecting them. Server room servers are headless, which means they do not need their own keyboard, video display and mouse. Instead, a KVM (keyboard, video and mouse) switch connects them all to a single keyboard, monitor and mouse.

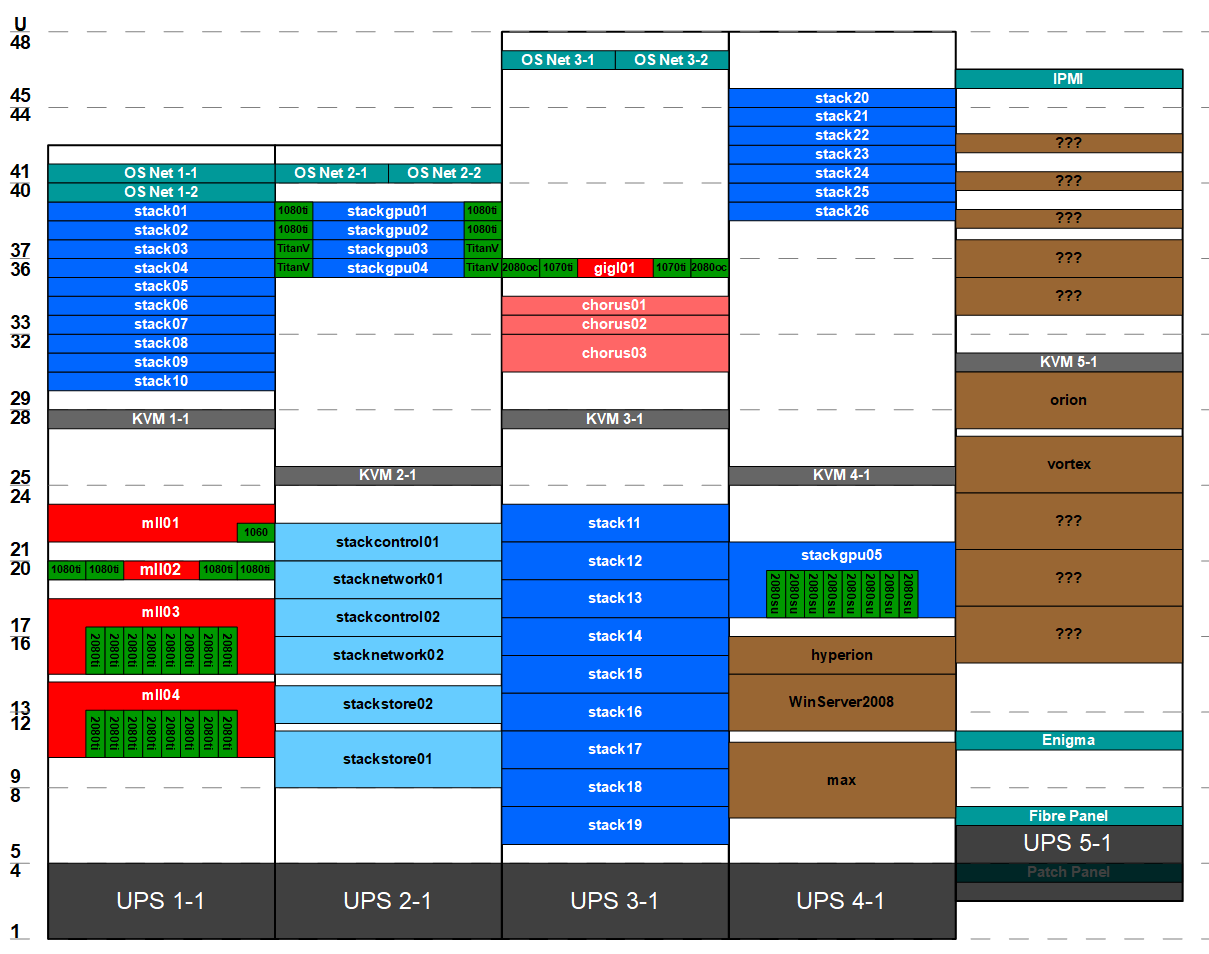

Figure 3 shows the server room components (Jan. 2021):

- Dark blue servers are the OpenStack compute nodes stack01-26, totalling more than 1,000 vCPUs. Each server has a dual-port 10G network card connecting it to both the internal (admin) network and the external (end-user) network

- Light blue servers – stackcontrol, stacknetwork and stackstore – are the OpenStack management and storage servers connected together as shown in Figure 1

- Red servers (light and dark red) are research group servers

- Green boxes inside the servers represent any available GPUs

- Dark aqua boxes, found mostly at the top of the racks (many with labels starting with "OS Net"), are network switches

- Brown servers are departmental infrastructure

- Gray boxes near the middle of the racks are the KVM switches. They connect the servers to a keyboard and monitor

- Dark gray equipment at the bottom of each rack is the Uninterruptible Power Supply (UPS), which provides power for a short time in the event of a power failure.

Compute Servers

You can think of a server room server as being like your desktop PC: they have similar main components. The servers are packaged in rackmount cases so they can be densely housed in the racks. A typical OpenStack compute server has the following specs (as of Jan. 2021):

- CPU: Dual 16-core CPUs, giving 2 x 16 = 32 CPU cores with 2 threads/core. Total: 64 vCPU cores

- RAM: 16 x 32GB = 512GB (or 0.5 TB) of ECC RAM

- Disk: 4 x 2TB SSD drives (data center class). Having four drives gives you the option of running various RAID configurations on your system. With a redundant RAID level, the server keeps running if a disk fails

- Network: Dual 10G networking (OpenStack requires a minimum of 2 network ports)

- Power: Redundant 1,000W platinum-level power supplies. Redundant means that if one power supply fails, the second power supply keeps the server running.
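The spec arithmetic above can be checked with a short Python sketch. `vcpus` multiplies sockets, cores and threads; `raid_summary` applies the standard textbook capacity formulas to the four 2TB drives. Both function names are hypothetical, and the specs do not say which RAID level SCS actually uses, so the loop simply compares common options.

```python
def vcpus(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Logical CPUs (vCPUs) the hypervisor can schedule."""
    return sockets * cores_per_socket * threads_per_core


def raid_summary(level: str, disks: int, disk_tb: float) -> tuple[float, int]:
    """Return (usable TB, disk failures always survived) for a RAID level.

    Textbook formulas; assumes enough disks for the level and ignores the
    small amount of space real arrays use for metadata.
    """
    if level == "RAID 0":    # striping only: full capacity, no redundancy
        return disks * disk_tb, 0
    if level == "RAID 1":    # every disk holds a full copy (n-way mirror)
        return disk_tb, disks - 1
    if level == "RAID 5":    # one disk's worth of distributed parity
        return (disks - 1) * disk_tb, 1
    if level == "RAID 6":    # two disks' worth of distributed parity
        return (disks - 2) * disk_tb, 2
    if level == "RAID 10":   # striped mirrored pairs (even number of disks)
        return (disks // 2) * disk_tb, 1
    raise ValueError(f"unsupported RAID level: {level}")


print(vcpus(sockets=2, cores_per_socket=16, threads_per_core=2))  # 64
print(16 * 32)                                                    # 512 GB of RAM
for level in ("RAID 0", "RAID 5", "RAID 6", "RAID 10"):
    usable, survives = raid_summary(level, disks=4, disk_tb=2)
    print(f"{level}: {usable} TB usable, survives {survives} disk failure(s)")
```

RAID 0 gives the most space but no protection, which is why only the redundant levels keep a server running after a disk failure. RAID 10 can survive a second failure if it hits the other mirrored pair, but only one failure is guaranteed.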

This 64 vCPU server could in theory run 64 virtual servers (instances), each with one dedicated vCPU core (and the RAM and disk space divided accordingly). However, OpenStack can over-subscribe vCPUs, meaning you can run hundreds of virtual servers if required. RAM is normally not over-subscribed, so memory is often the limiting factor. Many user applications do not saturate their CPU resources, so multiple users can often share CPU cores without a noticeable impact on performance.

This is a major benefit of virtualization (OpenStack): it can use the server's CPUs to their full potential, something that is harder to achieve on a single-user system. A single OpenStack compute server can host more than 100 user VMs!
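The over-subscription argument can be made concrete with a small capacity calculation. The sketch below is a simplified model, not Nova's scheduler: `cpu_ratio` plays the role of Nova's `cpu_allocation_ratio` setting, the value 16 is an assumed ratio, and the flavors (instance sizes) are hypothetical. It also ignores memory reserved for the host itself.

```python
from dataclasses import dataclass


@dataclass
class Host:
    vcpus: int    # hardware threads exposed as vCPUs
    ram_gb: int


@dataclass
class Flavor:
    name: str     # hypothetical instance size
    vcpus: int
    ram_gb: int


def max_instances(host: Host, flavor: Flavor,
                  cpu_ratio: float = 16.0, ram_ratio: float = 1.0) -> int:
    """Instances of one flavor that fit on a host under the given ratios.

    cpu_ratio > 1 over-subscribes vCPUs; ram_ratio = 1.0 means RAM is not
    over-subscribed. Host-reserved memory and disk are ignored.
    """
    by_cpu = int(host.vcpus * cpu_ratio) // flavor.vcpus
    by_ram = int(host.ram_gb * ram_ratio) // flavor.ram_gb
    return min(by_cpu, by_ram)   # whichever resource runs out first


node = Host(vcpus=64, ram_gb=512)
small = Flavor("hypothetical.small", vcpus=1, ram_gb=2)
medium = Flavor("hypothetical.medium", vcpus=2, ram_gb=4)

print(max_instances(node, small, cpu_ratio=1.0))  # 64: one dedicated vCPU each
print(max_instances(node, small))                 # 256: limited by RAM, not CPU
print(max_instances(node, medium))                # 128: limited by RAM, not CPU
```

With over-subscription switched on, the CPU limit jumps from 64 to 1,024 small instances, but the 512 GB of RAM caps the node at 256, which is why memory, not CPU, usually decides how many VMs a compute server can host.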

GPU Compute Servers

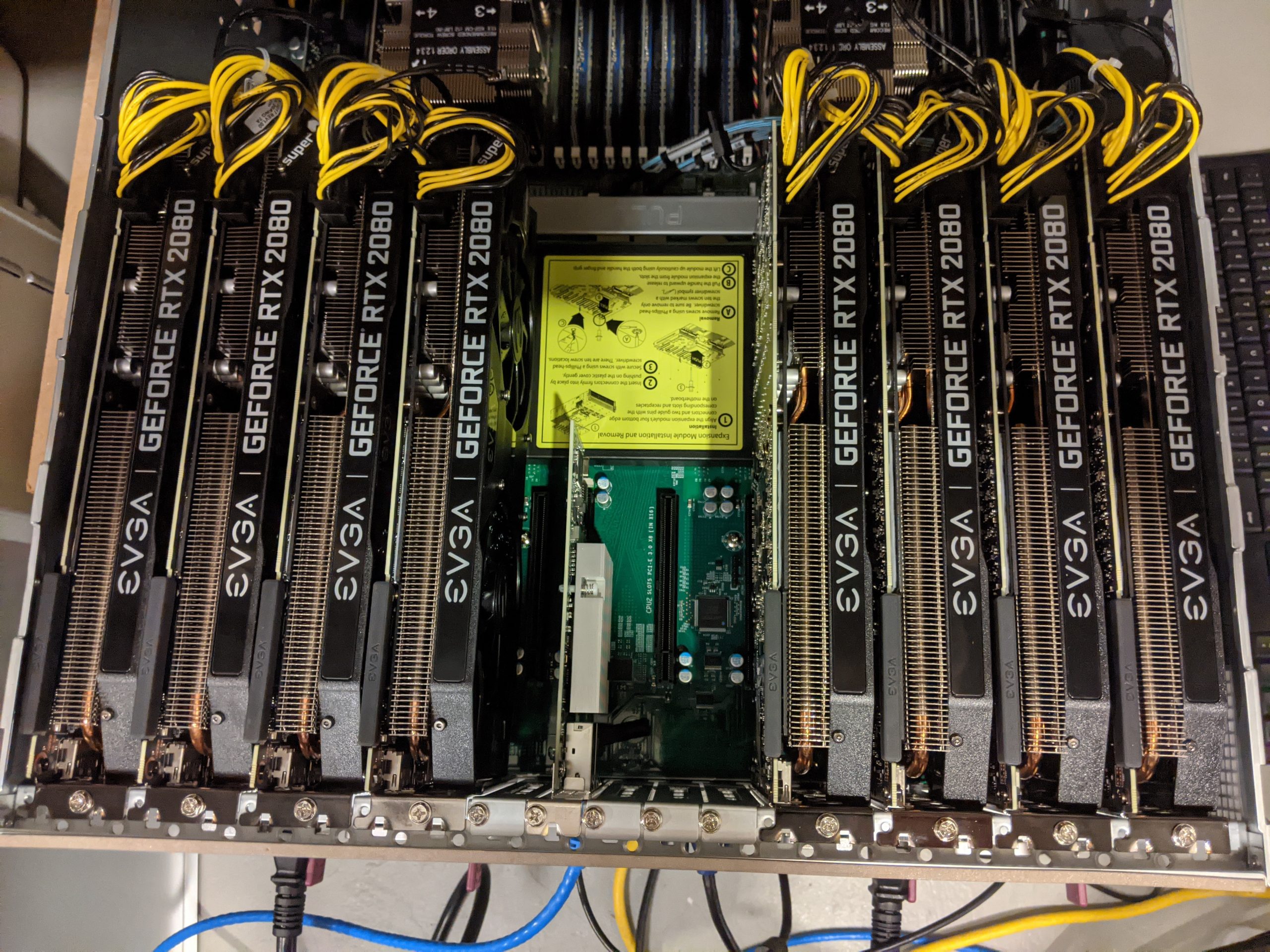

The SCS GPU compute servers are PC-based servers and can use regular NVIDIA gaming GPUs. Figure 4 shows a GPU server with 8 GPU cards. Having 8 GPUs in one server is desirable because OpenStack is flexible in how it allocates those resources. Having 8 GPUs on one hardware node gives the following VM options:

- Eight (8) virtual servers, each having 1 GPU

- One virtual server with 8 GPUs!

- Other combinations of single- and multi-GPU virtual servers
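The number of possible layouts grows quickly. If every GPU is assigned and each VM gets at least one, the layouts are exactly the integer partitions of 8. The Python sketch below enumerates them; `gpu_layouts` is a hypothetical helper, and it ignores idle GPUs and any hardware placement constraints a real deployment may impose.

```python
def gpu_layouts(total_gpus: int) -> list[tuple[int, ...]]:
    """List every way to split a node's GPUs across VMs (each VM gets >= 1 GPU).

    Each layout is a tuple of per-VM GPU counts in non-increasing order,
    i.e. an integer partition of total_gpus. All GPUs are assumed in use.
    """
    def partitions(remaining: int, largest: int):
        if remaining == 0:
            yield ()
            return
        # The next VM gets `size` GPUs; later VMs get at most `size` each,
        # so every layout is produced exactly once.
        for size in range(min(remaining, largest), 0, -1):
            for rest in partitions(remaining - size, size):
                yield (size,) + rest

    return list(partitions(total_gpus, total_gpus))


layouts = gpu_layouts(8)
print(len(layouts))   # 22
print(layouts[0])     # (8,): one VM with all 8 GPUs
print(layouts[-1])    # (1, 1, 1, 1, 1, 1, 1, 1): eight single-GPU VMs
```

Between those two extremes lie 20 mixed layouts, such as `(4, 2, 1, 1)`: one 4-GPU VM, one 2-GPU VM and two single-GPU VMs.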
